K8s ingress-nginx install

1. Installing and deploying ingress

1 Introduction to ingress

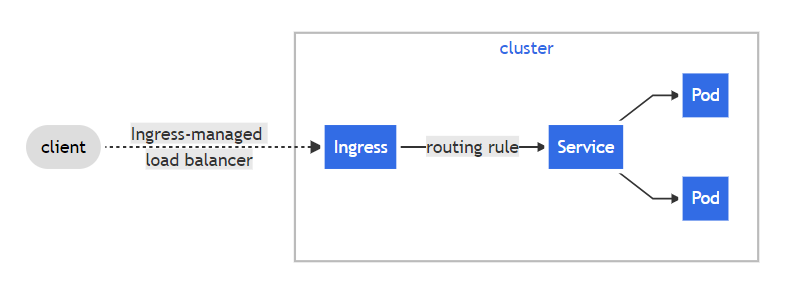

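What are ingress and ingress-controller?

An ingress is the set of rules that exposes HTTP and HTTPS routes from outside the cluster to services inside it; the ingress-controller is what actually implements those routing rules.

ingress is an abstract resource in K8S that gives administrators a way to define an entry point for exposing applications.

The ingress-controller component generates concrete routing rules from ingress objects and load-balances traffic across Pods.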

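How the ingress-controller works:

The ingress-controller talks to the K8S API to watch for changes to the ingress rules in the cluster. It reads each rule (a rule states which domain maps to which service), generates the corresponding Nginx configuration, applies it to the Nginx instance it manages, and hot-reloads Nginx so the change takes effect. This is how the Nginx load balancer is configured and kept up to date dynamically.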
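To make the rule-to-configuration step concrete, here is a minimal shell sketch that turns one "domain to service" mapping into an Nginx server block. It is an illustration only: the real controller renders a far larger template and proxies to Pod endpoints, and the helper name `render_server_block` and the sample Service DNS name are assumptions made for this example.

```shell
#!/usr/bin/env bash
# Illustration only: render a minimal Nginx server block for one ingress rule.
# render_server_block is a hypothetical helper, not part of ingress-nginx.
render_server_block() {
  local host="$1" service="$2" port="$3"
  cat <<EOF
server {
    listen 80;
    server_name ${host};
    location / {
        proxy_set_header Host \$host;
        proxy_pass http://${service}:${port};
    }
}
EOF
}

# Example rule: requests for web1.lyc7456.com go to Service web1 on port 80.
render_server_block web1.lyc7456.com web1.default.svc.cluster.local 80
```

Each time a rule changes, the controller regenerates configuration of this kind and reloads Nginx, which is the loop described above.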

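2 Deploying the ingress-nginx-controller

There are two Nginx-based ingress implementations: one maintained by the Kubernetes community, the other by Nginx Inc. We use ingress-nginx, the one from the Kubernetes community.

Everything discussed here is for a bare-metal (self-hosted) K8S cluster; choose the ingress-nginx controller version to install according to your K8S version. For a cloud-hosted K8S cluster, follow your cloud provider's documentation instead.

See the README.md on the official site for the compatibility between ingress-nginx and K8S versions.

The K8S cluster tested here is recorded as 2.3.10 (Kubernetes has no 2.x release, so this is most likely a typo for 1.23.10).

2.1 Choose version v1.1.1 and run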

    cd /data/k8s/ingress

Method 2 (the one used for this deployment; the version is newer)

    wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v1.1.1/deploy/static/provider/cloud/deploy.yaml

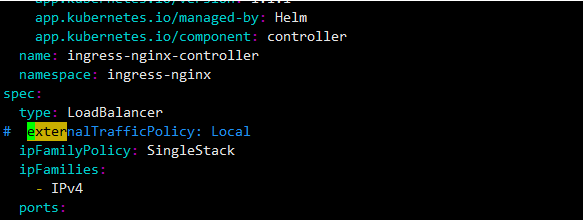
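Before deploy.yaml is applied, this walkthrough makes two edits to it, listed next: commenting out externalTrafficPolicy: Local and swapping the images. A hedged sketch of scripting those edits with sed follows. It runs against a small stand-in file rather than the real manifest; the upstream image lines in the stand-in are assumed to resemble those in deploy.yaml, with placeholder digests.

```shell
#!/usr/bin/env bash
# Stand-in for the relevant lines of deploy.yaml (the real file is much longer;
# the upstream image names and the sha256:0000 digests here are placeholders).
cat > deploy.sample.yaml <<'EOF'
  externalTrafficPolicy: Local
  type: LoadBalancer
          image: k8s.gcr.io/ingress-nginx/controller:v1.1.1@sha256:0000
          image: k8s.gcr.io/ingress-nginx/kube-webhook-certgen:v1.1.1@sha256:0000
          image: k8s.gcr.io/ingress-nginx/kube-webhook-certgen:v1.1.1@sha256:0000
EOF

# Change 1: comment out externalTrafficPolicy: Local (indentation preserved).
# Change 2: point the controller and certgen images at the mirrors used here.
sed -E \
  -e 's|^([[:space:]]*)(externalTrafficPolicy: Local)|\1# \2|' \
  -e 's|^([[:space:]]*image: ).*ingress-nginx/controller:.*|\1bitnami/nginx-ingress-controller:1.1.2|' \
  -e 's|^([[:space:]]*image: ).*kube-webhook-certgen.*|\1liangjw/kube-webhook-certgen:v1.1.1|' \
  deploy.sample.yaml > deploy.patched.yaml

cat deploy.patched.yaml
```

Writing to a new file instead of editing in place keeps the original manifest available for comparison with diff.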

- Change 1: comment out externalTrafficPolicy: Local

- Change 2: replace the images

      image: bitnami/nginx-ingress-controller:1.1.2
      image: liangjw/kube-webhook-certgen:v1.1.1
      image: liangjw/kube-webhook-certgen:v1.1.1

2.2 Checking the version

    POD_NAMESPACE=ingress-nginx
    POD_NAME=$(kubectl get pods -n $POD_NAMESPACE -l app.kubernetes.io/name=ingress-nginx --field-selector=status.phase=Running -o name)
    kubectl exec $POD_NAME -n $POD_NAMESPACE -- /nginx-ingress-controller --version
    -------------------------------------------------------------------------------
    NGINX Ingress controller
      Release:       v1.1.1
      Build:         a17181e43ec85534a6fea968d95d019c5a4bc8cf
      Repository:    https://github.com/kubernetes/ingress-nginx
      nginx version: nginx/1.19.9
    -------------------------------------------------------------------------------

2.3 Making ingress-nginx-controller the default ingress controller

If a single instance of ingress-nginx-controller is the only ingress controller running in the cluster, add the annotation ingressclass.kubernetes.io/is-default-class to its IngressClass, so that any new ingress object that does not name a class gets this one as its default IngressClass.

    apiVersion: networking.k8s.io/v1
    kind: IngressClass
    metadata:
      labels:
        app.kubernetes.io/component: controller
      name: nginx
      annotations:
        ingressclass.kubernetes.io/is-default-class: "true"  # added
    spec:
      controller: k8s.io/ingress-nginx
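If the controller is already deployed, the same annotation can probably be set without editing the manifest, using kubectl annotate. This is an alternative that the walkthrough itself does not use. In the sketch below, `run` and `mark_default_ingressclass` are hypothetical helpers; by default they only print the command (DRY_RUN=1) so it can be reviewed first.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the command when DRY_RUN=1 (default), else run it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Mark the given IngressClass as the cluster default.
mark_default_ingressclass() {
  run kubectl annotate ingressclass "$1" \
    ingressclass.kubernetes.io/is-default-class=true --overwrite
}

mark_default_ingressclass nginx
```

Run it with DRY_RUN=0 to actually apply the annotation against the current kubectl context.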

2.4 Container time zone

Check the ingress-nginx logs

    kubectl logs ingress-nginx-controller-mdn6r -n ingress-nginx

Find the Deployment YAML and add hostPath-type volumes to it

    ....

Checking again, the timestamps are now correct

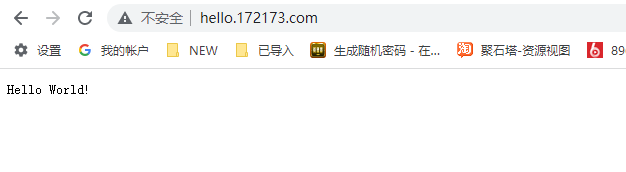
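The exact volume configuration is not shown above. One common way to do it, sketched here as an assumption rather than as the configuration actually used, is to mount the node's /etc/localtime into the controller container. The volume name `host-localtime` and the file name `tz-patch.yaml` are made up for this example; the container name `controller` is assumed to match the upstream manifest.

```shell
#!/usr/bin/env bash
# Assumed approach: share the node's /etc/localtime with the controller Pod.
# Written as a strategic merge patch so the rest of the Deployment is untouched.
cat > tz-patch.yaml <<'EOF'
spec:
  template:
    spec:
      containers:
        - name: controller            # assumed container name
          volumeMounts:
            - name: host-localtime    # hypothetical volume name
              mountPath: /etc/localtime
              readOnly: true
      volumes:
        - name: host-localtime
          hostPath:
            path: /etc/localtime
EOF

# Apply once reviewed (requires cluster access):
# kubectl -n ingress-nginx patch deployment ingress-nginx-controller --patch-file tz-patch.yaml
```

The patch triggers a rollout, so the Pod name in the kubectl logs command changes afterwards.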

2.5 Installing a test application

    cat > ingress-demo-app.yaml << EOF

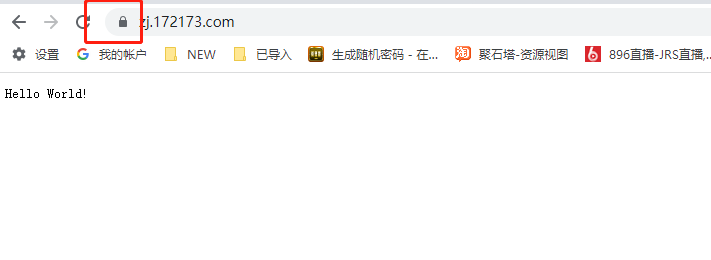

Bind the host name on the local machine

    192.168.100.50 hello.172173.com

2.6 Filtering for the ingress ports

    [root@hello ~/yaml]# kubectl get svc -A | grep ingress

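3 DaemonSet + nodeSelector for HA

- deployment + service: see over-a-nodeport-service in the official documentation for details

- daemonset + hostNetwork: see via-the-host-network in the official documentation for details (recommended)

This article covers only the second, better option.

3.1 hostNetwork: true: exposing the service through the host network

Via the host network

The purpose of exposing nginx-ingress-controller outside the cluster is to provide RS (real servers) for the load balancer (LVS/Nginx) in the front-end access layer.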

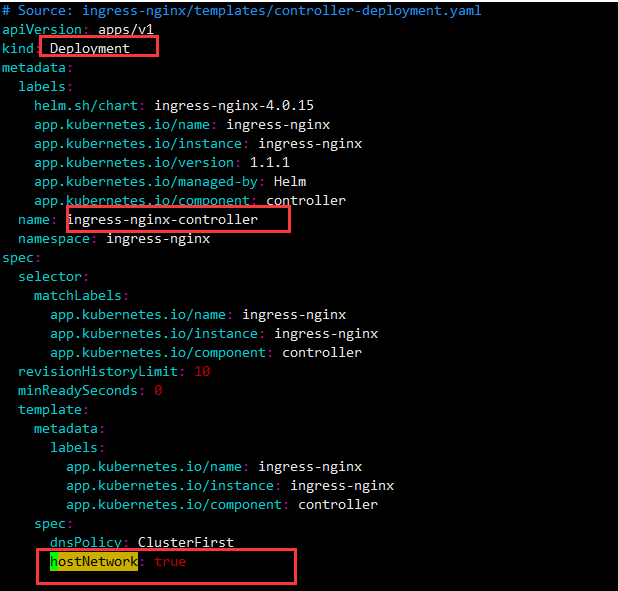

hostNetwork: true means the Pod serves traffic through its host node's network, i.e. ports 80 and 443 are exposed directly on the host.

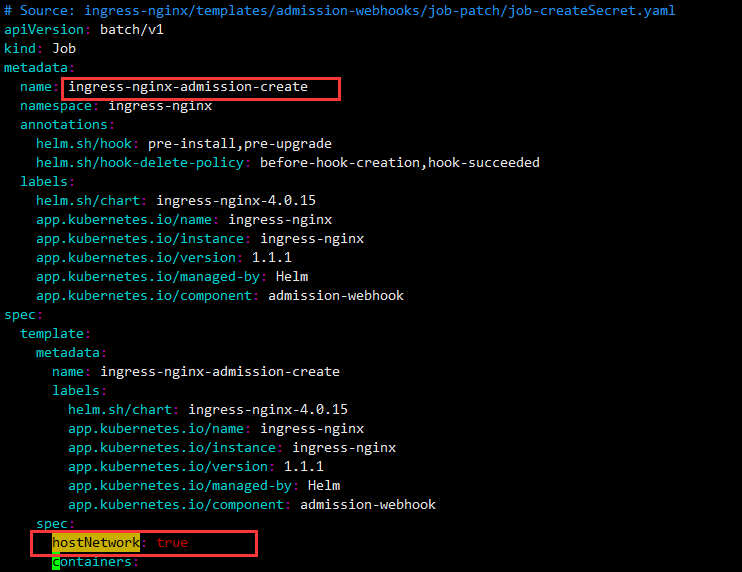

Add the field hostNetwork: true under the Deployment's spec.template.spec, at the same level as containers.

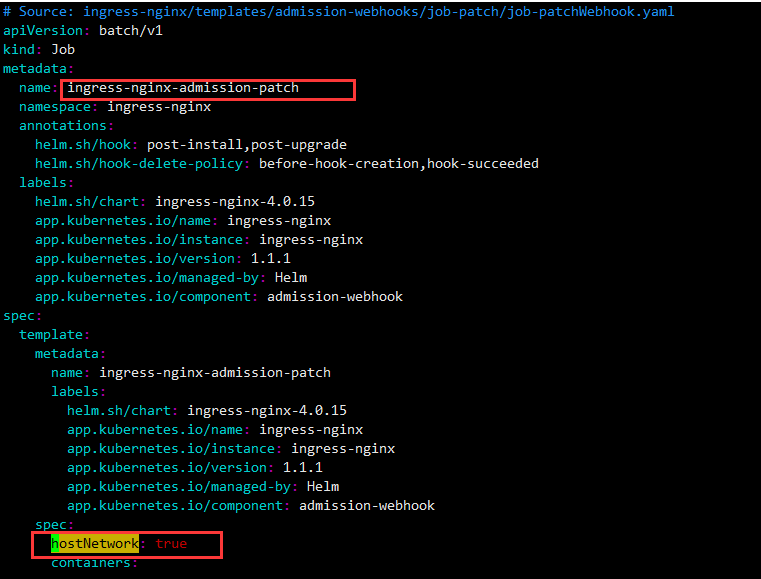

hostNetwork has to be set in three places

- 1 In deploy.yaml, under spec.template.spec of the ingress-nginx-admission-create Job, add hostNetwork: true

- 2 In deploy.yaml, under spec.template.spec of the ingress-nginx-admission-patch Job, add hostNetwork: true

- 3 In deploy.yaml, under spec.template.spec of the Deployment, add hostNetwork: true
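For the controller Deployment (item 3), the change can be sketched as a small patch file. The dnsPolicy line is not in the steps above; it is included on the assumption that the controller should keep resolving cluster-internal DNS names while on the host network. The file name `hostnetwork-patch.yaml` is arbitrary.

```shell
#!/usr/bin/env bash
# Sketch: pod-spec fields for running the controller on the host network.
cat > hostnetwork-patch.yaml <<'EOF'
spec:
  template:
    spec:
      hostNetwork: true
      dnsPolicy: ClusterFirstWithHostNet   # assumption: not in the original steps
EOF

# Apply once reviewed (requires cluster access):
# kubectl -n ingress-nginx patch deployment ingress-nginx-controller --patch-file hostnetwork-patch.yaml
#
# Then, on the node running the Pod, check the listening ports:
# ss -lntp | grep -E ':(80|443) '
```

With hostNetwork enabled, every port the controller listens on is bound on the node itself, so anything already using those ports on that node will conflict with it.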

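    # On the node, ports 80 and 443 are now exposed directly on the host's network stack.

On a cloud provider's managed K8S cluster, you would normally use Service type=LoadBalancer to tie the public cloud load balancer (SLB/ULB) to nginx-ingress-controller; see "UCloud nginx-ingress-controller: 0.23.0".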

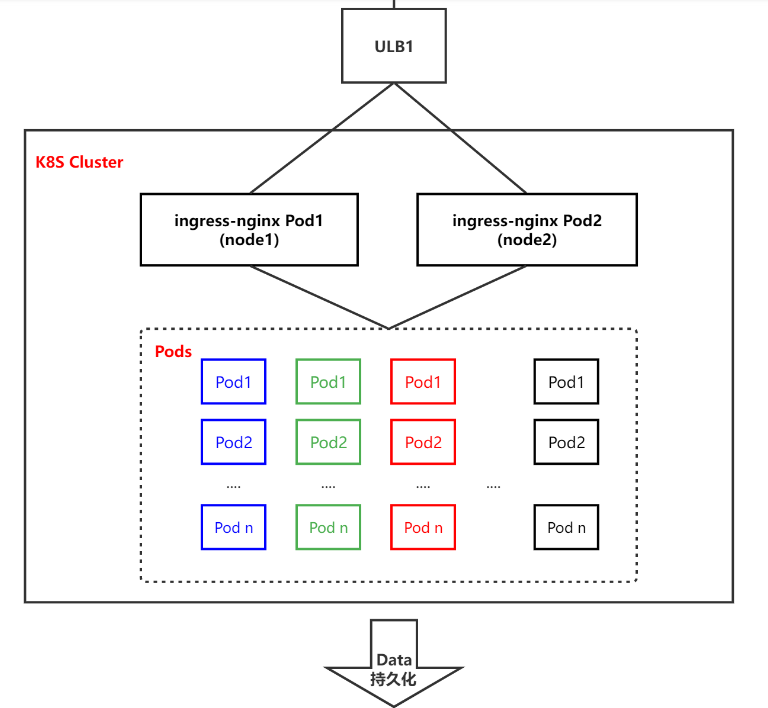

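3.2 DaemonSet + nodeSelector for HA

The official yaml uses a Deployment by default, with replicas set to 1. We want nginx-ingress to survive failures, so it needs more replicas.

A Deployment may schedule its Pods onto any node, so when they are registered as RS behind the front-end load balancer their IPs can change. We would rather pin nginx-ingress to a few specific nodes.

Before the change, ingress was running on master03

    kubectl get po -A -o wide

A cluster can have a great many worker nodes. We do not want to register all of them with the load balancer, and running nginx-ingress on every node would also waste resources.

In summary, use DaemonSet + nodeSelector (a label selector) to pick a few specific nodes to run nginx-ingress, then register those nodes as the fixed back-end RS of the access-layer load balancer (LVS/SLB) to share the traffic.

    # Add the ingress-nginx label to the chosen node; k8s-node01 matches the name defined earlier in hosts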
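The commands that followed this comment are not preserved, so the following is only a sketch of how the labelling and the nodeSelector might look. The label `ingress-nginx=true`, the helper `label_cmds`, the node name k8s-node02 and the fragment file name are all assumptions for illustration.

```shell
#!/usr/bin/env bash
# Assumed label; any key=value works as long as the nodeSelector matches it.
INGRESS_LABEL="ingress-nginx=true"

# Hypothetical helper: print one kubectl label command per node for review.
label_cmds() {
  local node
  for node in "$@"; do
    echo "kubectl label node ${node} ${INGRESS_LABEL} --overwrite"
  done
}

# k8s-node01 comes from the text; k8s-node02 is a made-up second node.
label_cmds k8s-node01 k8s-node02

# Fragment of the controller workload after the change (not a full manifest).
cat > daemonset-nodeselector.fragment.yaml <<'EOF'
kind: DaemonSet          # was: Deployment (also drop spec.replicas and spec.strategy)
spec:
  template:
    spec:
      nodeSelector:
        kubernetes.io/os: linux
        ingress-nginx: "true"
EOF
```

A DaemonSet with this nodeSelector runs exactly one controller Pod on each labelled node, so the set of RS addresses changes only when labels are added or removed.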

4 Enable the default backend: write it into the configuration file and apply

Access by IP

5. ingress rules

For details, see the official documentation:

Kubernetes ingress rules

Kubernetes ingress TLS

Kubernetes IngressClass

Kubernetes ingress DefaultBackend, default-backend

5.1 ingress HTTPS

Configure the https ingress rule

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: web1
      namespace: default
    spec:
      ingressClassName: nginx
      rules:
      - host: web1.lyc7456.com        # domain name
        http:
          paths:
          - path: /                   # /
            pathType: Prefix          # match rule: prefix match
            backend:                  # back-end RS, comparable to an nginx upstream
              service:                # the k8s Service
                name: web1            # Service name (its CoreDNS name)
                port:
                  number: 80          # Service port

5.2 ingress TLS

Add the TLS certificate

    # Create the secret from the command line, using absolute paths
    $ kubectl create secret tls www.lyc7456.com --cert=/root/ssl-key/www.lyc7456.com.crt --key=/root/ssl-key/www.lyc7456.com.key
    kubectl create secret tls zj.172173.com --cert=public.pem --key=private.key

    # Or create the secret from yaml
    ---
    apiVersion: v1
    kind: Secret
    metadata:
      name: testsecret-tls
      namespace: default
    data:
      tls.crt: base64-encoded cert
      tls.key: base64-encoded key
    type: kubernetes.io/tls
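The yaml form needs the certificate and key as single-line base64 strings. A small sketch of producing them follows; `render_tls_secret` is a hypothetical helper written for this example, and it assumes the PEM files already exist at the paths passed in.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a kubernetes.io/tls Secret manifest with the
# given cert and key files base64-encoded onto single lines.
render_tls_secret() {
  local name="$1" crt="$2" key="$3"
  cat <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: ${name}
  namespace: default
type: kubernetes.io/tls
data:
  tls.crt: $(base64 < "$crt" | tr -d '\n')
  tls.key: $(base64 < "$key" | tr -d '\n')
EOF
}

# Usage with the paths from the kubectl example:
# render_tls_secret www.lyc7456.com /root/ssl-key/www.lyc7456.com.crt /root/ssl-key/www.lyc7456.com.key > secret.yaml
```

Stripping newlines matters because some base64 implementations wrap long output, which would break the YAML scalar.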

    # List the secret objects
    $ kubectl get secret

5.3 Configuring the TLS ingress rule

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: www
    spec:
      ingressClassName: nginx
      tls:                              # enable TLS
      - hosts:
        - www.lyc7456.com               # domain name
        secretName: www.lyc7456.com     # secret name
      rules:
      - host: www.lyc7456.com
        http:
          paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: www
                port:
                  number: 80

5.4 Testing

    cat > ingress-demo-app-443.yaml << EOF

Bind hosts and test

5.5 Errors

The troubleshooting actually went in the wrong direction from the very start.

Lesson learned: always read through all of the logs first.

    Error from server (InternalError): error when creating "ingress-demo-app-443.yaml": Internal error occurred: failed calling webhook "validate.nginx.ingress.kubernetes.io": failed to call webhook: Post "https://ingress-nginx-controller-admission.ingress-nginx.svc:443/networking/v1/ingresses?timeout=10s": x509: certificate is valid for kubernetes, kubernetes.default, kubernetes.default.svc, kubernetes.default.svc.cluster, kubernetes.default.svc.cluster.local, not ingress-nginx-controller-admission.ingress-nginx.svc

This was probably left over from a misconfigured first attempt that was never deleted, so the create call failed; deleting it and redeploying resolved the problem.

Approach 1

A temporary workaround, and clearly not a very reliable one

Delete ingress-nginx-admission

    kubectl get validatingwebhookconfigurations

Approach 2

ingress-nginx-controller-admission.ingress-nginx.svc

    CA=$(kubectl -n ingress-nginx get secret ingress-nginx-admission -ojsonpath='{.data.ca}')

    cfssl gencert \
      -ca=/etc/kubernetes/pki/ca.pem \
      -ca-key=/etc/kubernetes/pki/ca-key.pem \
      -config=ca-config.json \
      -hostname=10.96.0.1,192.168.100.50,127.0.0.1,kubernetes,kubernetes.default,kubernetes.default.svc,kubernetes.default.svc.cluster,kubernetes.default.svc.cluster.local,ingress-nginx-controller-admission.ingress-nginx.svc,192.168.100.45,192.168.100.46,192.168.100.47,192.168.100.48,192.168.100.49,192.168.100.51,192.168.100.52,192.168.100.53 \
      -profile=kubernetes apiserver-csr.json | cfssljson -bare /etc/kubernetes/pki/apiserver

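Approach 3

Painful but rewarding: the earlier approaches turned out to be wrong, so I went back and reread the logs.

The error found:

    "Error listening for TLS connections" err="listen tcp :8443: bind: address already in use

It turned out that the HA components we had deployed on node1 and node2 were occupying the port, so ingress had never actually come up properly. Moving it elsewhere and redeploying fixed the problem.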
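Since the root cause was sitting in the controller logs all along, a small filter for bind failures would have surfaced it quickly. The sketch below is one way to do that; `conflicting_ports` is a hypothetical helper that reads log text on stdin and prints each port that failed to bind.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read log text on stdin, print each port that could
# not be bound because it was already in use (one per line, de-duplicated).
conflicting_ports() {
  grep -oE 'listen tcp [^ ]*:[0-9]+: bind: address already in use' \
    | sed -E 's/.*:([0-9]+): bind.*/\1/' \
    | sort -u
}

# Typical use (requires cluster access; the Pod name is a placeholder):
# kubectl -n ingress-nginx logs <controller-pod> | conflicting_ports
#
# Then, on the affected node, see which process holds the port:
# ss -lntp | grep ':8443'
```

With hostNetwork enabled, this kind of conflict can appear on any node that already runs something on the controller's ports.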