Rancher Container Cloud Management Platform

Rancher is an enterprise-grade Kubernetes management platform.

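Rancher is a complete software stack for teams adopting containers. It addresses the operational and security challenges of managing multiple Kubernetes clusters, and gives DevOps teams integrated tools for running containerized workloads.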

1. Host Hardware

| No. | Hardware | OS and kernel |
| --- | --- | --- |
| 1 | CPU 4, Memory 4G, Disk 100G | CentOS7 |

2. Host Configuration

2.1 Hostname

2.2 IP Address

    [root@rancherserver ~]
    TYPE="Ethernet"
    PROXY_METHOD="none"
    BROWSER_ONLY="no"
    BOOTPROTO="none"
    DEFROUTE="yes"
    IPV4_FAILURE_FATAL="no"
    IPV6INIT="yes"
    IPV6_AUTOCONF="yes"
    IPV6_DEFROUTE="yes"
    IPV6_FAILURE_FATAL="no"
    IPV6_ADDR_GEN_MODE="stable-privacy"
    NAME="ens33"
    UUID="ec87533a-8151-4aa0-9d0f-1e970affcdc6"
    DEVICE="ens33"
    ONBOOT="yes"
    IPADDR="192.168.10.130"
    PREFIX="24"
    GATEWAY="192.168.10.2"
    DNS1="119.29.29.29"

2.3 Hostname and IP Address Resolution

    127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
    ::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
    192.168.10.130 rancherserver
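The hosts entry above can be added in a repeatable way with a small helper. This is a minimal sketch: the function name `add_host_entry` and its optional file argument are illustrative and do not come from the original article.

```bash
#!/usr/bin/env bash
# add_host_entry IP NAME [FILE]
# Appends "IP NAME" to FILE (default /etc/hosts) unless NAME already
# appears as a hostname on a non-comment line.
# Note: NAME is used inside a regular expression, so dots match any character.
add_host_entry() {
    local ip="$1" name="$2" file="${3:-/etc/hosts}"
    if grep -Eq "^[^#]*[[:space:]]${name}([[:space:]]|\$)" "$file"; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}
```

For this setup the call would be `add_host_entry 192.168.10.130 rancherserver`, run as root. Running it twice leaves a single entry.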

2.4 Host Security Settings

2.5 Host Clock Synchronization

    0 */1 * * * ntpdate time1.aliyun.com

2.6 Disable Swap

Disable swap on the Kubernetes cluster nodes.
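The original does not show the commands for this step. A common approach is to turn swap off for the running system with `swapoff -a` and comment out the swap entries in `/etc/fstab` so it stays off after a reboot. The helper below is a sketch of the second part; the name `disable_swap_in_fstab` is illustrative.

```bash
#!/usr/bin/env bash
# disable_swap_in_fstab [FILE]
# Comments out every active swap entry in FILE (default /etc/fstab)
# and keeps a backup copy at FILE.bak.
disable_swap_in_fstab() {
    local file="${1:-/etc/fstab}"
    # Only touch lines that are not already comments and have "swap" as a field.
    sed -i.bak '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$file"
}

# Typical use on a node, as root:
#   swapoff -a               # turn swap off immediately
#   disable_swap_in_fstab    # keep it off across reboots
```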

2.7 Configure Kernel IP Forwarding

    ...
    net.ipv4.ip_forward=1
    net.ipv4.ip_forward = 1
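The setting above is usually placed in `/etc/sysctl.conf` and loaded with `sysctl -p`, which echoes each applied key in the form `net.ipv4.ip_forward = 1`. The original listing lost its commands, so the following is only a sketch of one way to do it; the function name `enable_ip_forward` is illustrative.

```bash
#!/usr/bin/env bash
# enable_ip_forward [FILE]
# Ensures FILE (default /etc/sysctl.conf) contains net.ipv4.ip_forward=1.
# An existing uncommented setting is rewritten; otherwise a line is appended.
enable_ip_forward() {
    local file="${1:-/etc/sysctl.conf}"
    if grep -Eq '^[[:space:]]*net\.ipv4\.ip_forward[[:space:]]*=' "$file"; then
        sed -i.bak 's/^[[:space:]]*net\.ipv4\.ip_forward[[:space:]]*=.*/net.ipv4.ip_forward=1/' "$file"
    else
        printf 'net.ipv4.ip_forward=1\n' >> "$file"
    fi
}

# Typical use, as root:
#   enable_ip_forward
#   sysctl -p    # load the settings from /etc/sysctl.conf
```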

3. Installing docker-ce

Install docker-ce on all hosts.

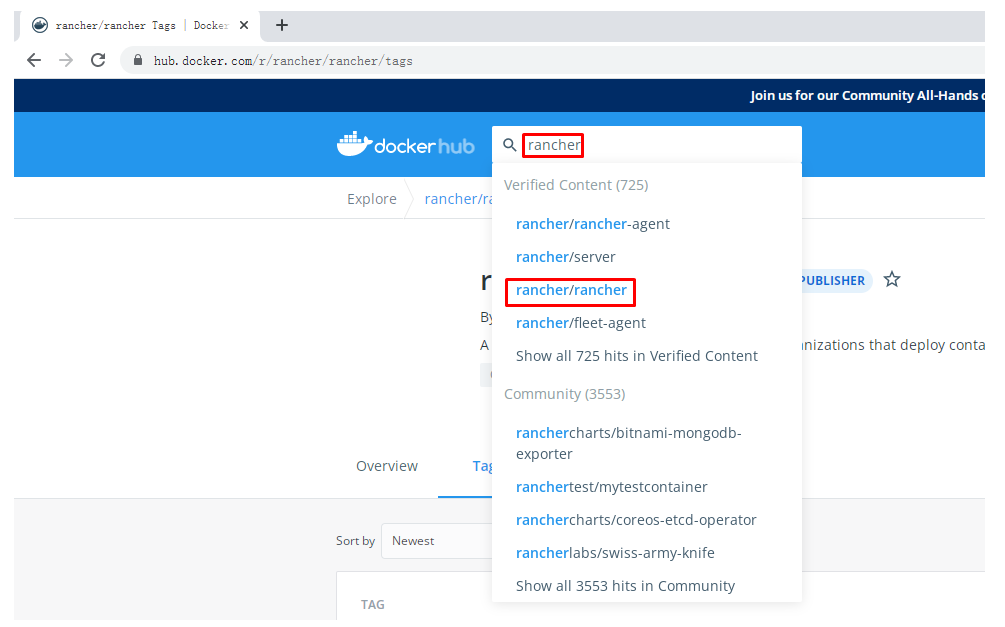

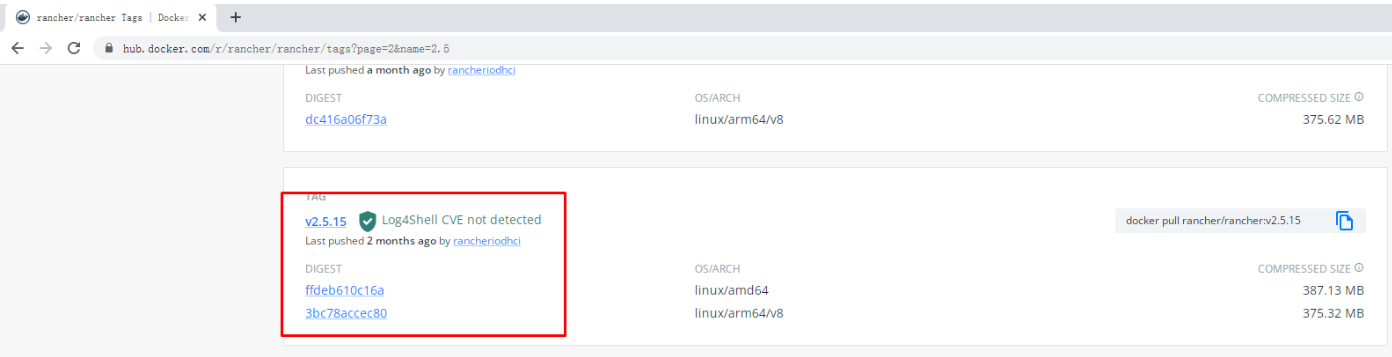

4. Installing Rancher

    [root@rancherserver ~]
    [root@rancherserver ~]
    [root@rancherserver ~]
    [root@rancherserver ~]
    [root@rancherserver ~]
    CONTAINER ID   IMAGE                     COMMAND           CREATED          STATUS          PORTS                                                                      NAMES
    99e367eb35a3   rancher/rancher:v2.5.15   "entrypoint.sh"   26 seconds ago   Up 26 seconds   0.0.0.0:80->80/tcp, :::80->80/tcp, 0.0.0.0:443->443/tcp, :::443->443/tcp   rancher-2.5.15
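The commands behind the listing above did not survive, so the exact invocation is unknown. A single-node start consistent with the `docker ps` output (image `rancher/rancher:v2.5.15`, container name `rancher-2.5.15`, ports 80 and 443) might look like the sketch below. The wrapper name `start_rancher` and the `DOCKER` override variable are illustrative. `--privileged` is included because Rancher 2.5 and later need it for a Docker install.

```bash
#!/usr/bin/env bash
# start_rancher [VERSION]
# Starts a single-node Rancher server container for VERSION (default v2.5.15).
# Set DOCKER=echo to print the arguments instead of running docker.
start_rancher() {
    local version="${1:-v2.5.15}"
    ${DOCKER:-docker} run -d --restart=unless-stopped --privileged \
        --name "rancher-${version#v}" \
        -p 80:80 -p 443:443 \
        "rancher/rancher:${version}"
}
```

After `start_rancher`, `docker ps` should list the container as in the output above.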

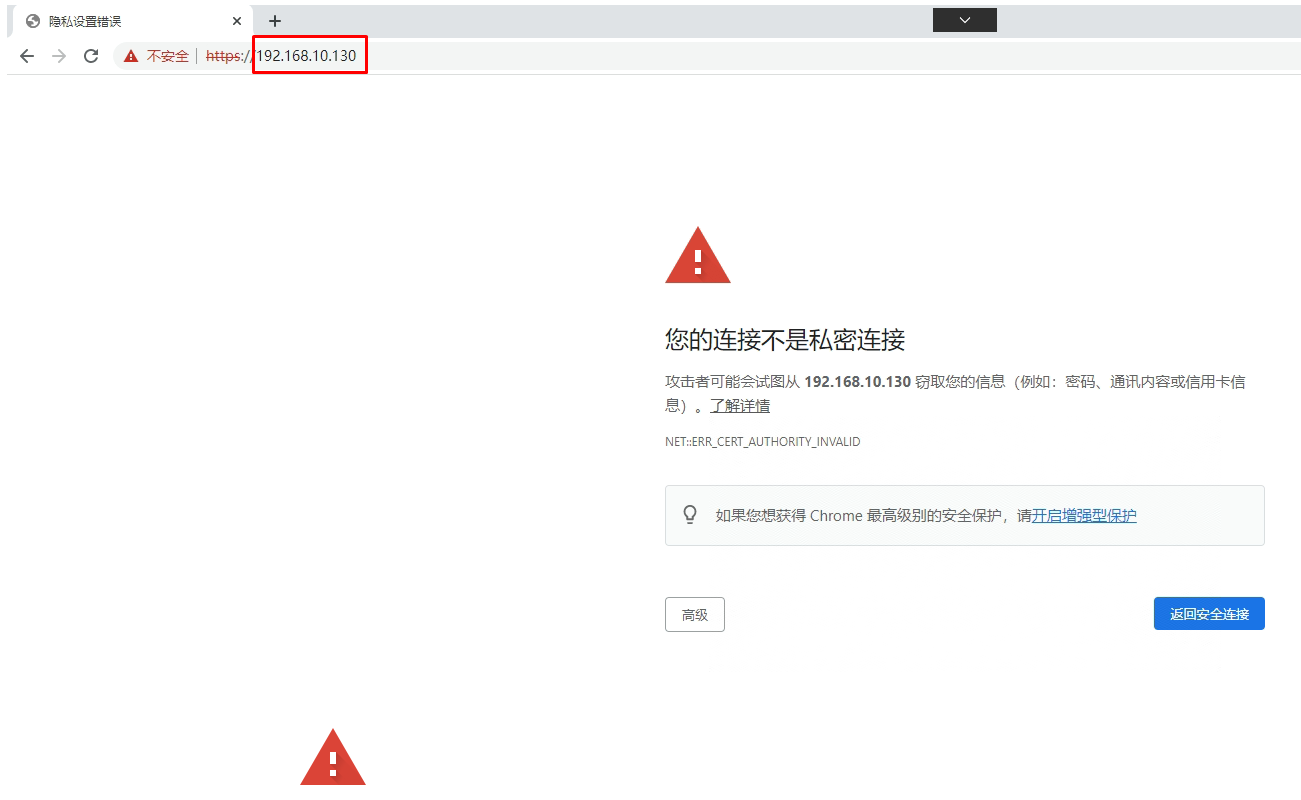

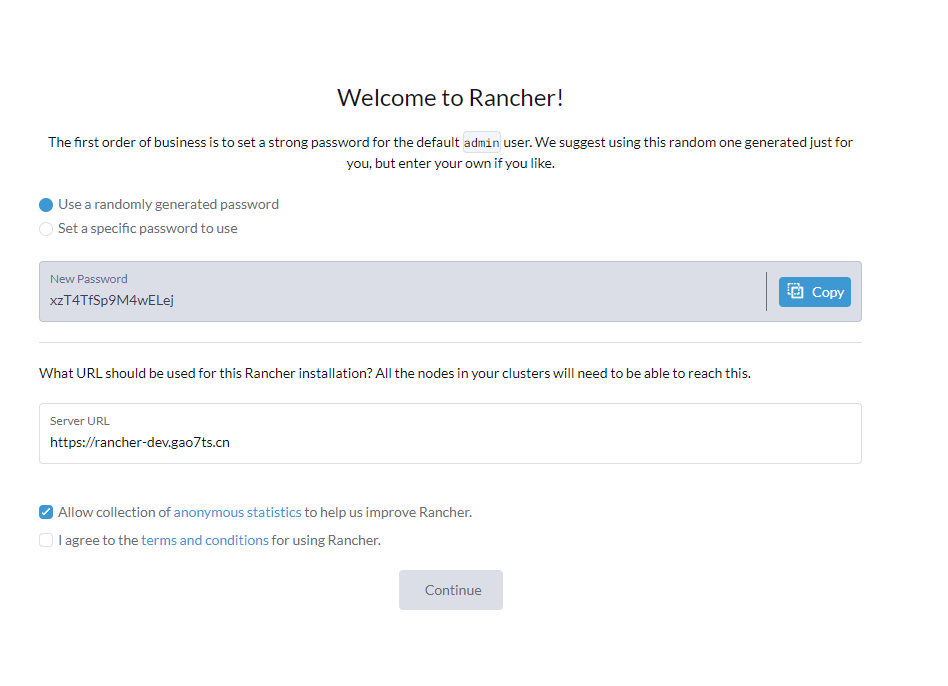

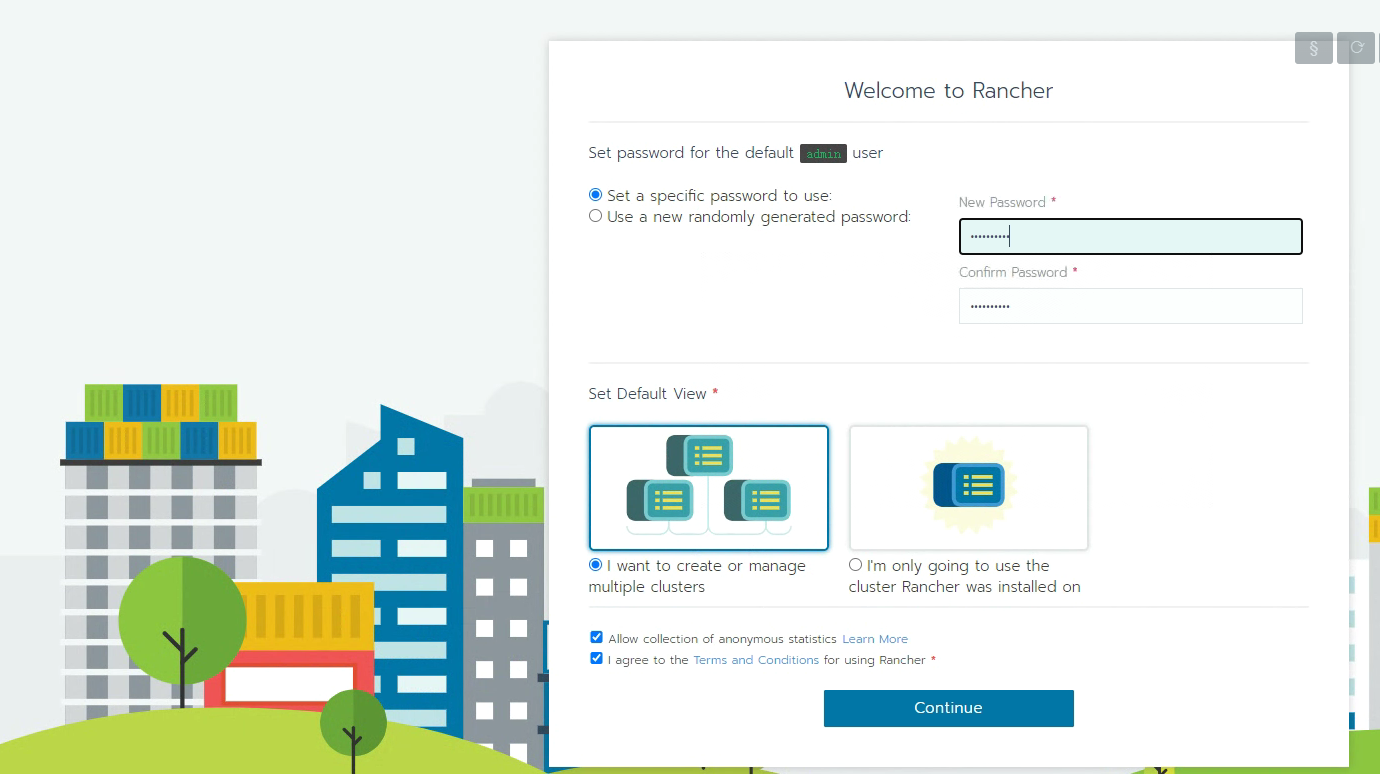

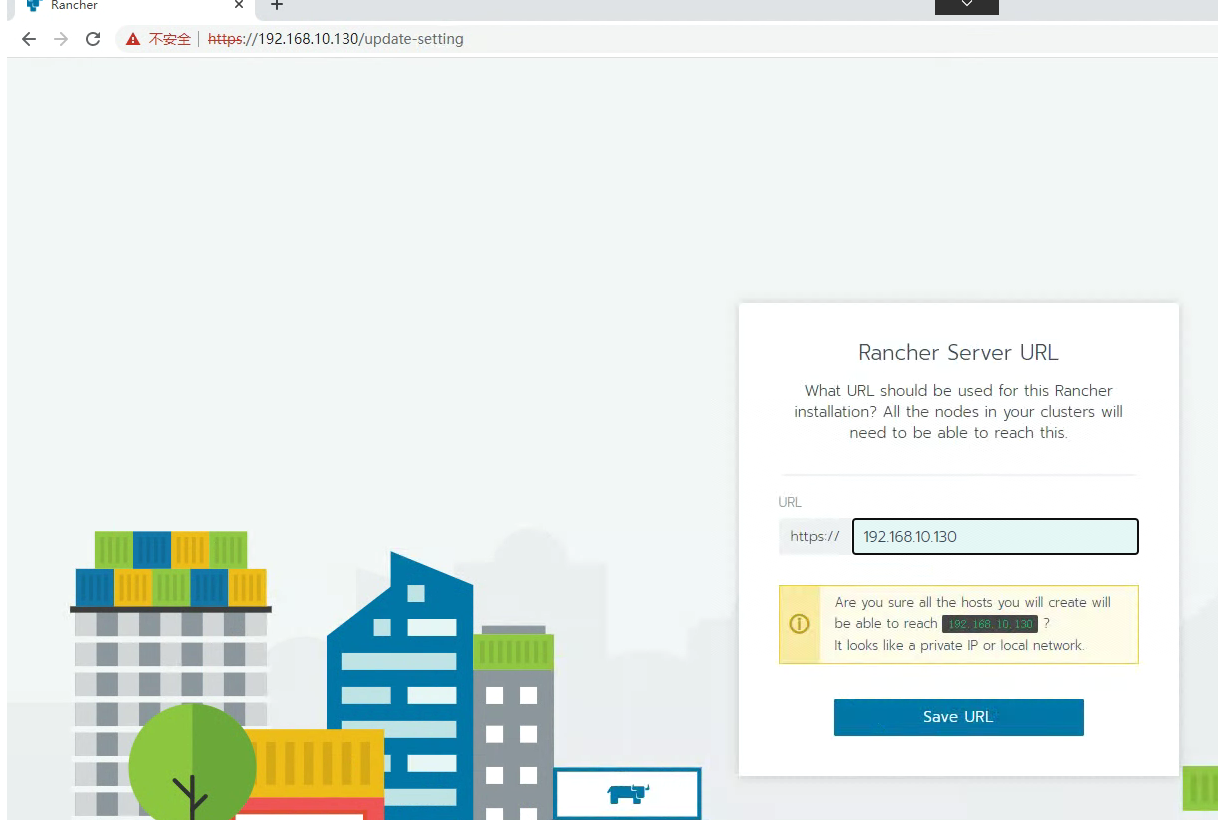

5. Deploying a Kubernetes Cluster with Rancher

5.1 Accessing Rancher

It is best to configure a TLS certificate for Rancher.

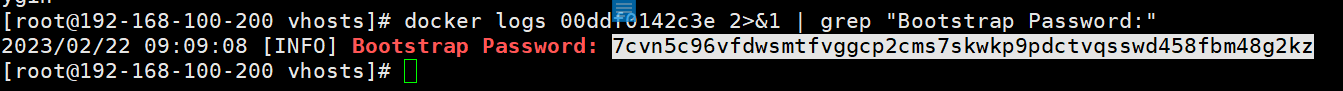

    docker logs container-id 2>&1 | grep "Bootstrap Password:"

The password used this time is Kubemsb123.
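To capture only the password value instead of the whole log line, the `grep` can be replaced by a small filter. This is a sketch that assumes the log line contains the text `Bootstrap Password:` followed by the value; the function name `extract_bootstrap_password` is illustrative.

```bash
#!/usr/bin/env bash
# Reads container log text on stdin and prints the first bootstrap
# password found, with everything up to "Bootstrap Password:" removed.
extract_bootstrap_password() {
    sed -n 's/.*Bootstrap Password:[[:space:]]*//p' | head -n 1
}

# Usage:
#   docker logs container-id 2>&1 | extract_bootstrap_password
```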

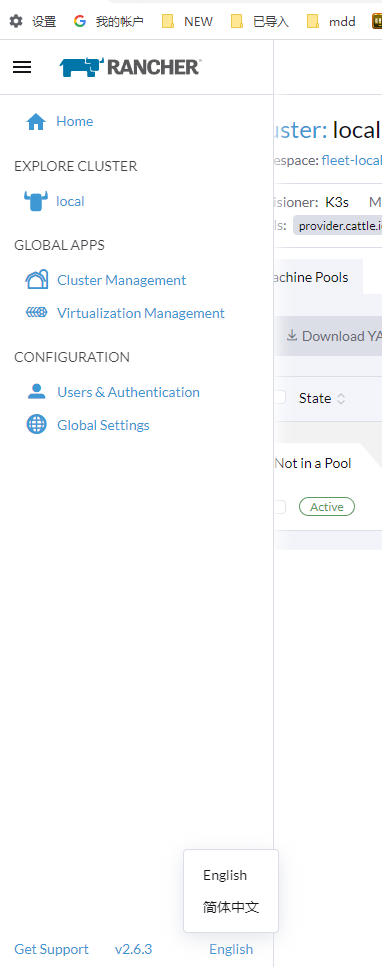

Set the interface language to Chinese.

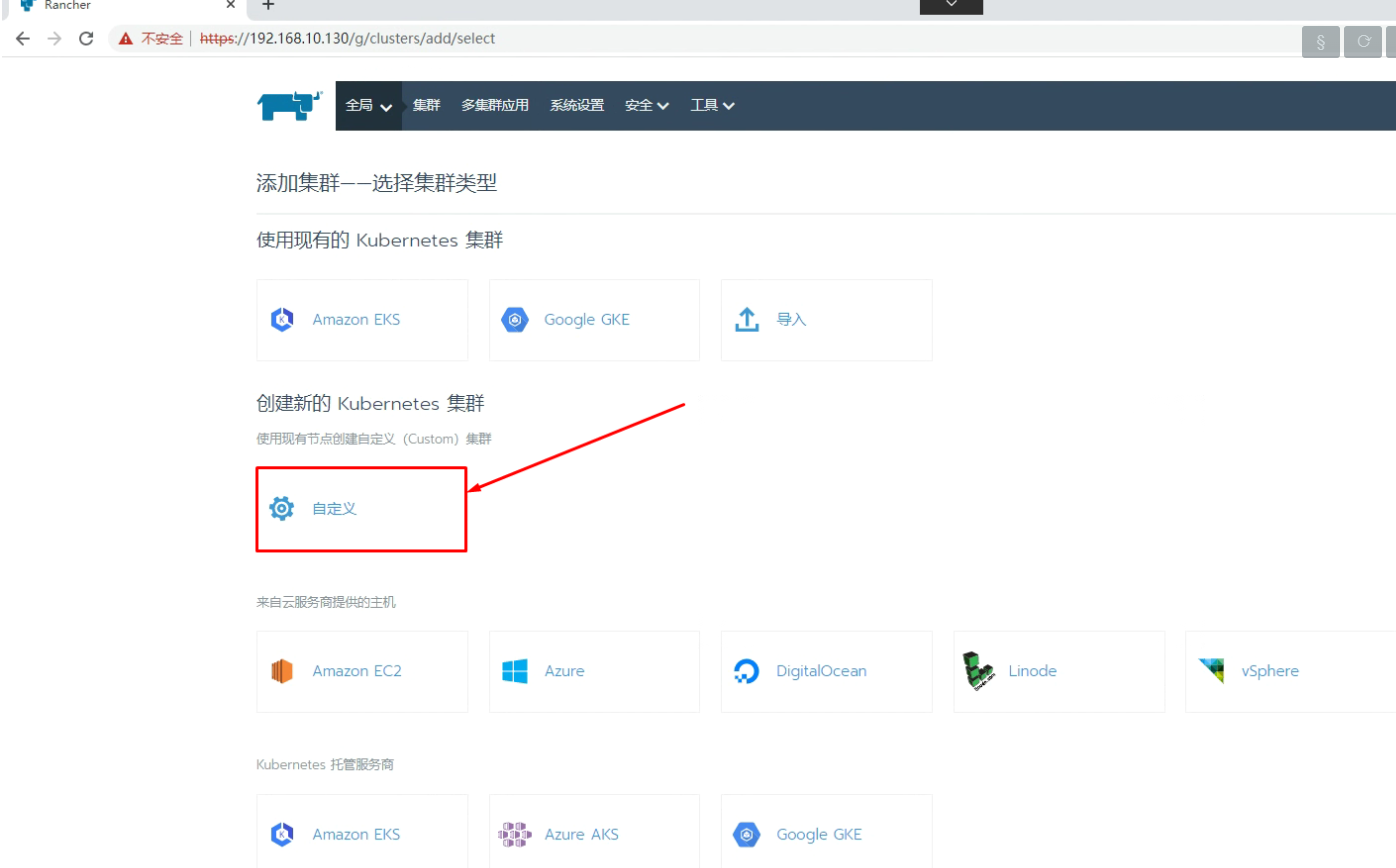

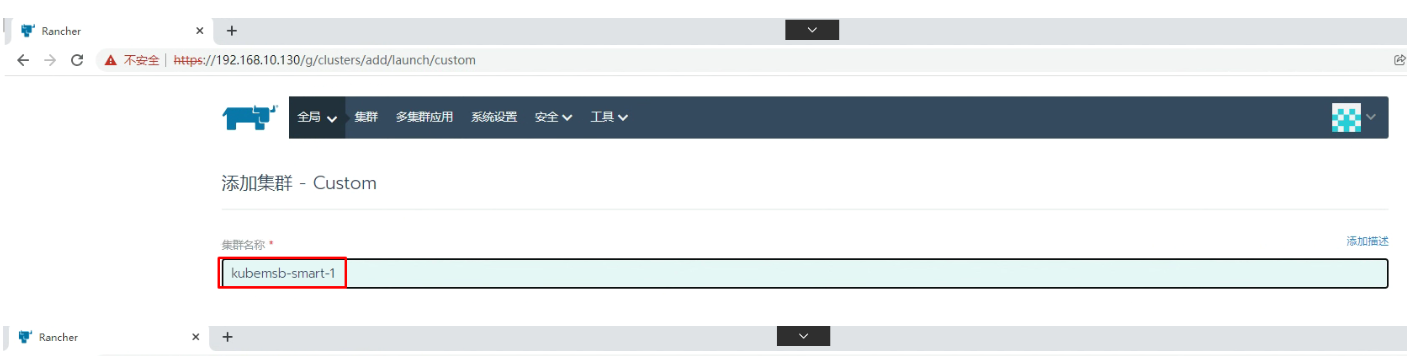

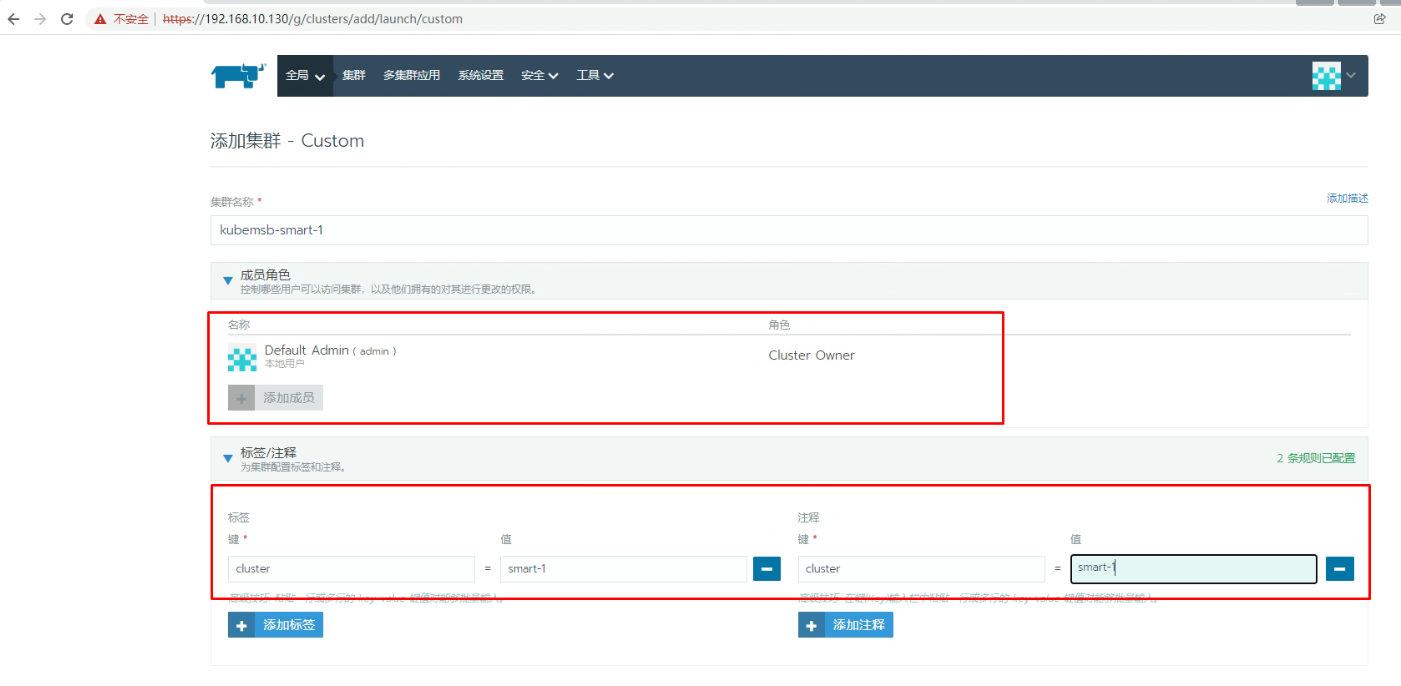

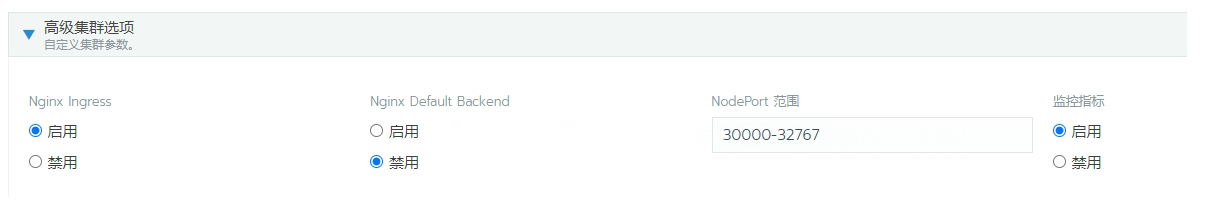

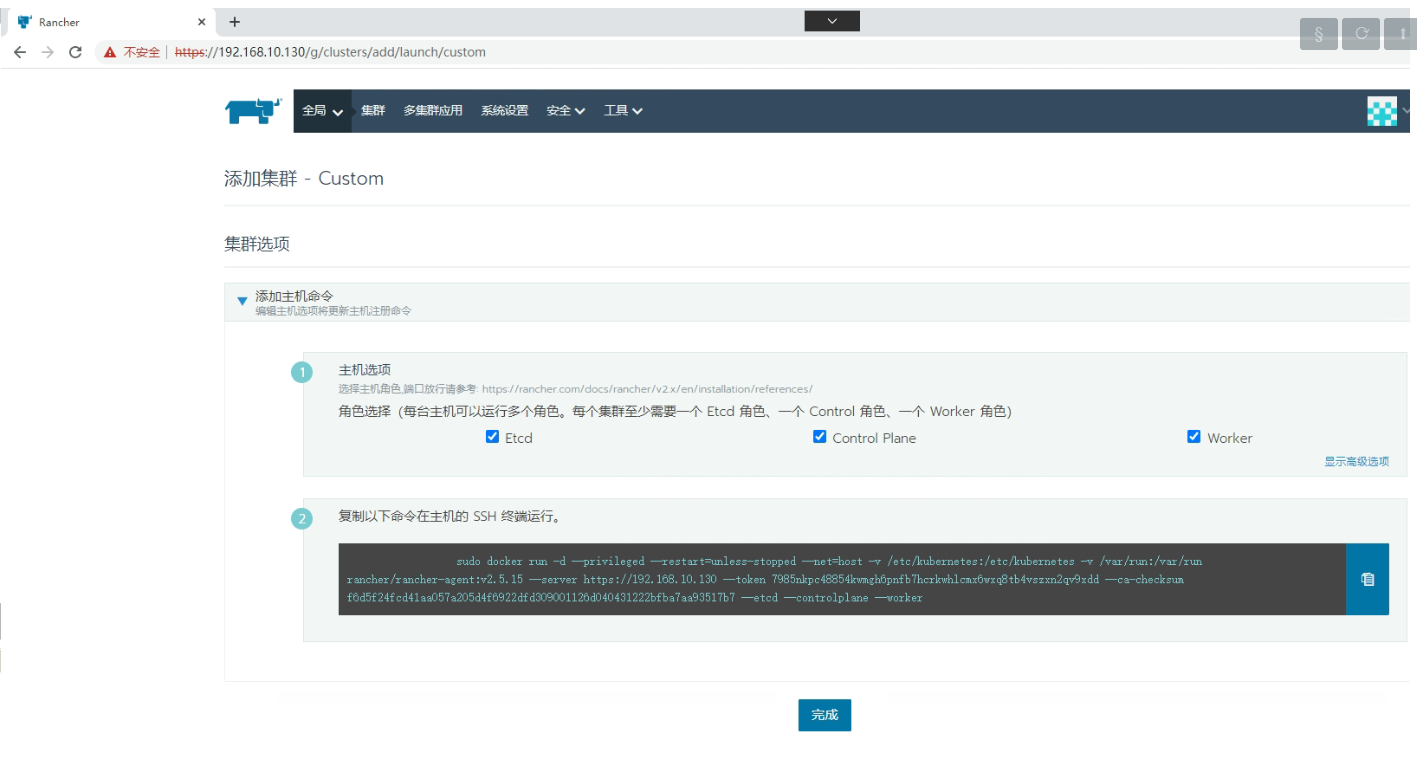

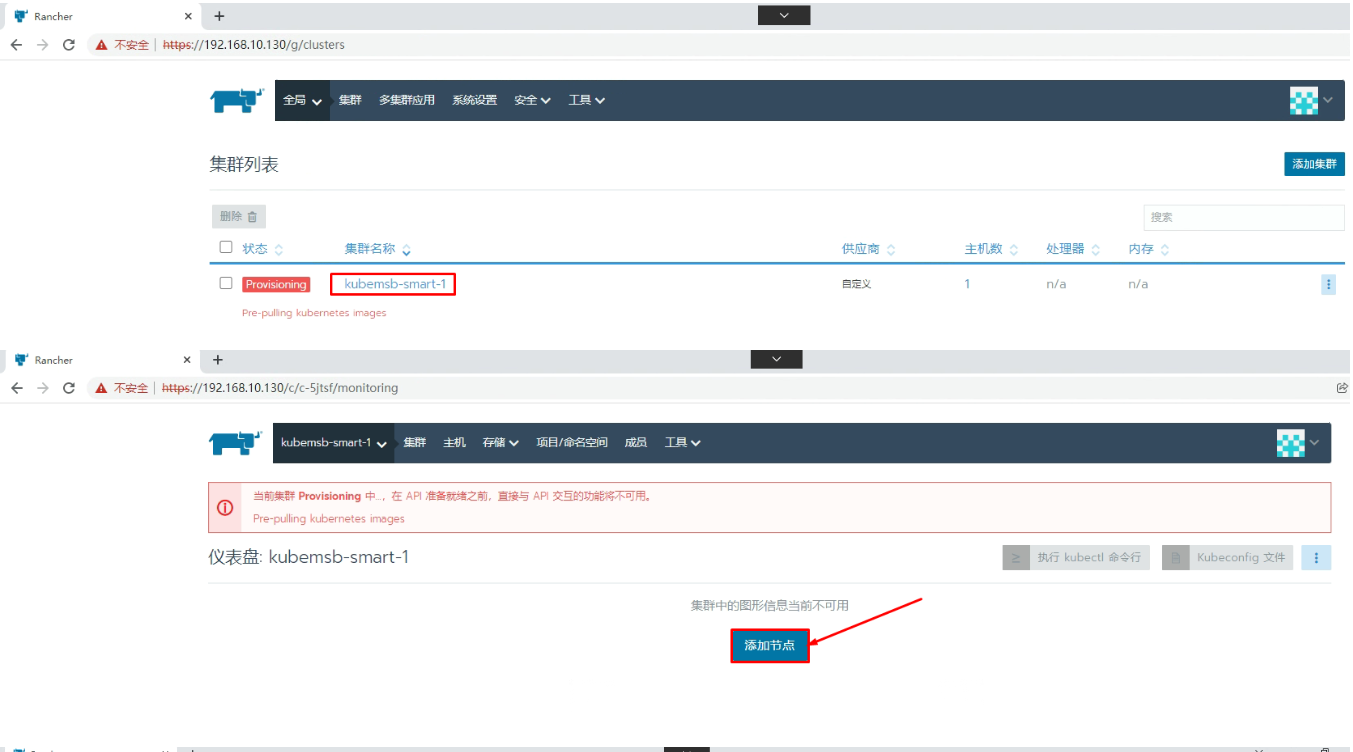

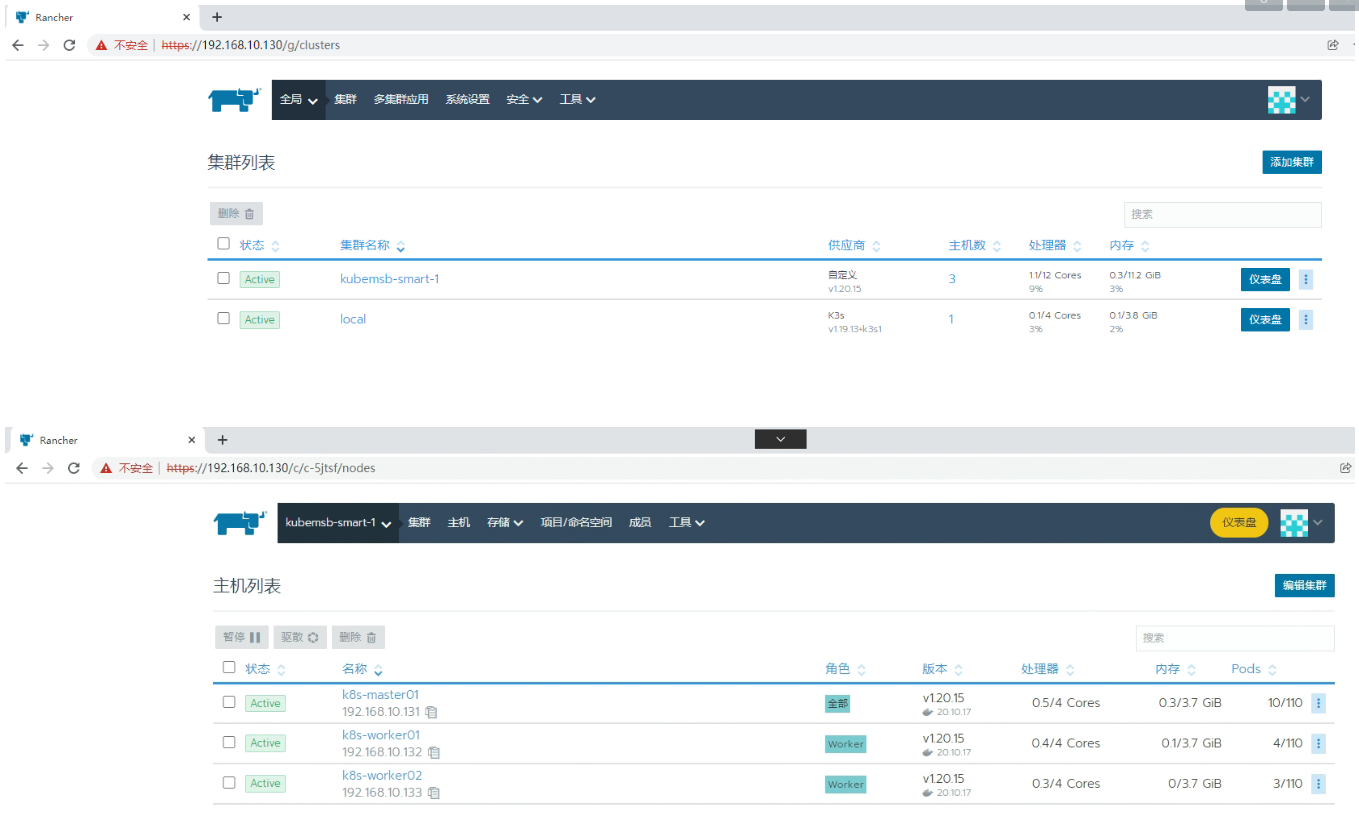

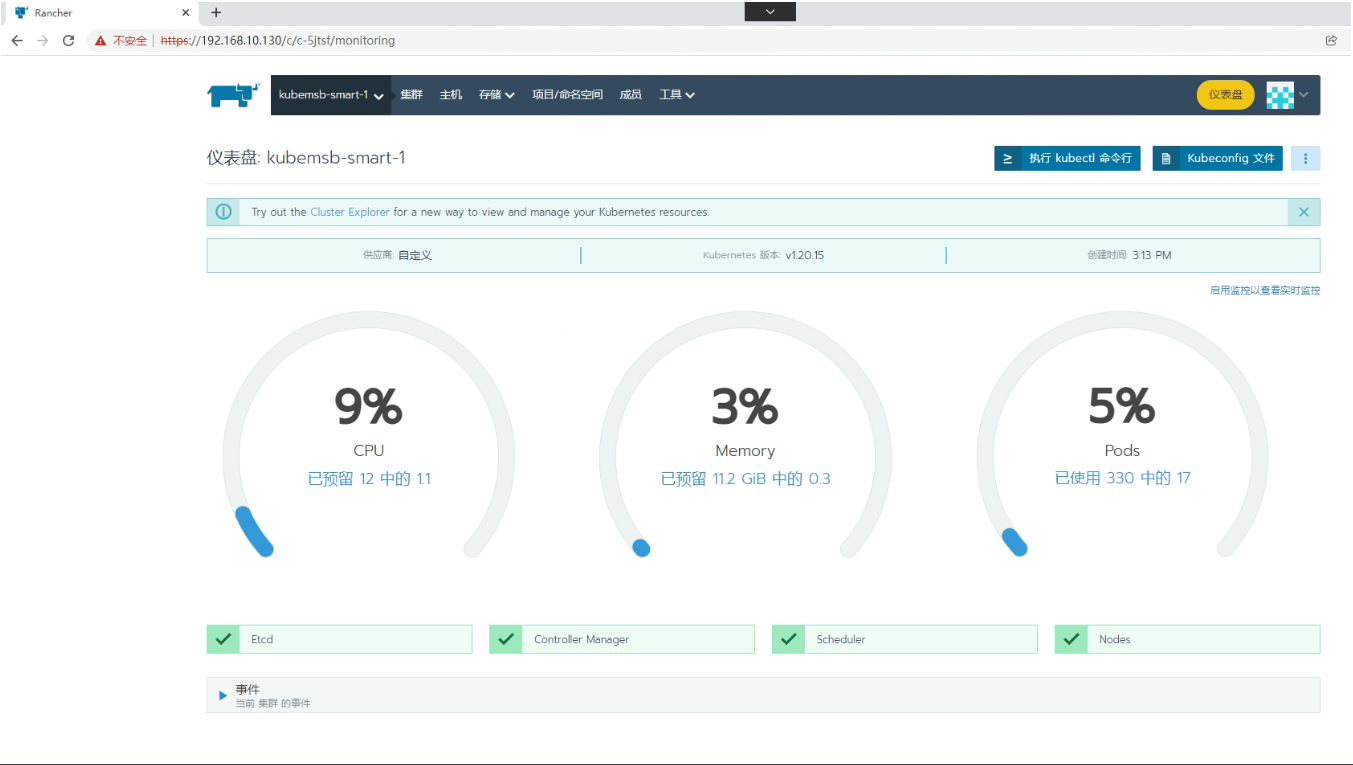

5.2 Creating a Kubernetes Cluster with Rancher

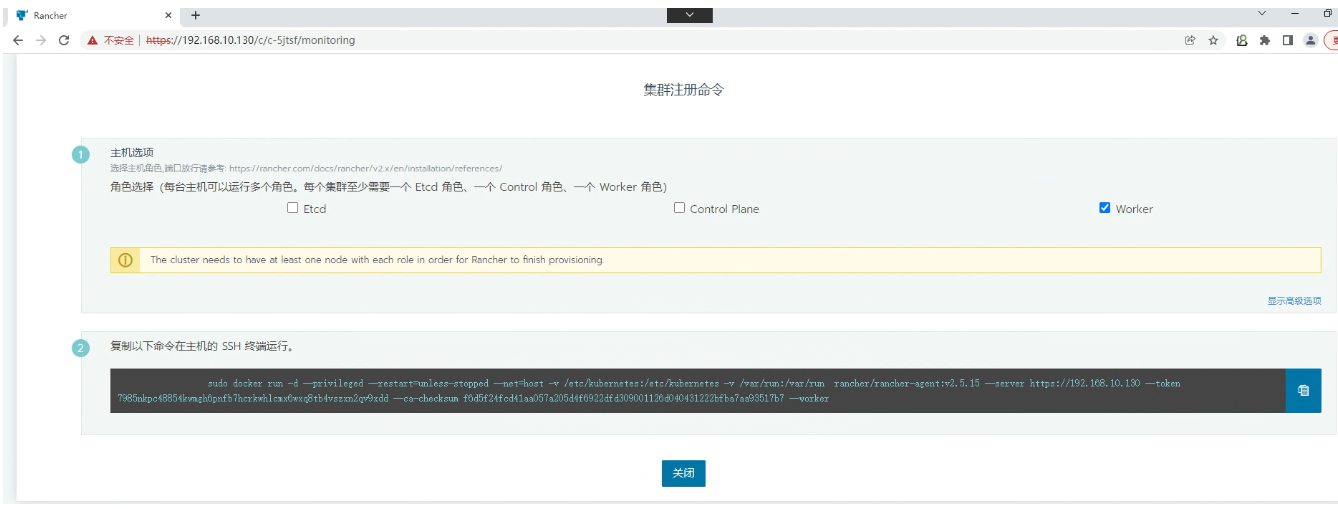

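Note: run the command on a node that has kubeconfig configured. In most cases the first node is the main control node, also called the master. Run the command on the server node: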

    [root@k8s-master01 ~]
    [root@k8s-master01 ~]
    CONTAINER ID   IMAGE                           COMMAND                  CREATED          STATUS          PORTS     NAMES
    8e7e73b477dc   rancher/rancher-agent:v2.5.15   "run.sh --server htt…"   20 seconds ago   Up 18 seconds             brave_ishizaka

Add the worker nodes:

    [root@k8s-worker01 ~]
    [root@k8s-worker02 ~]

Status after all nodes are active:

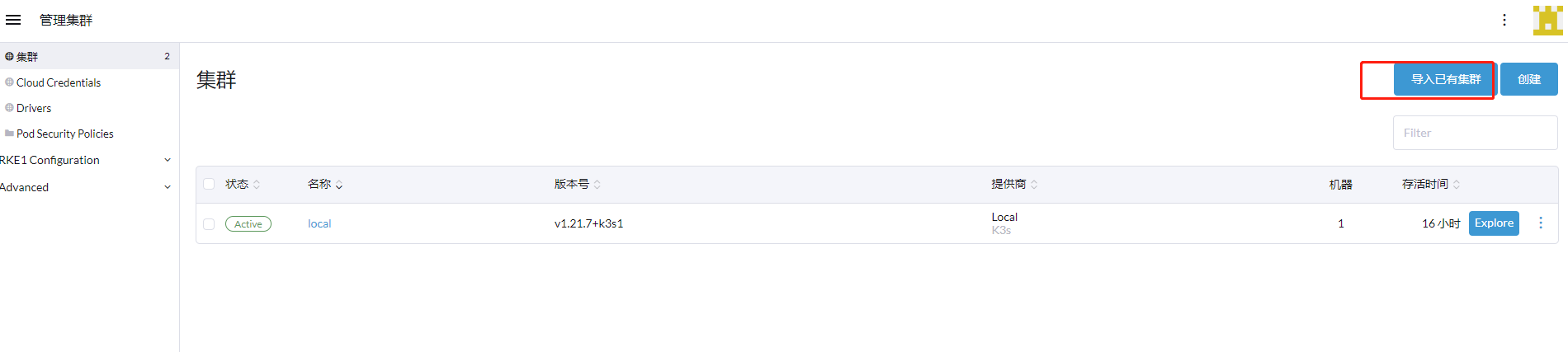

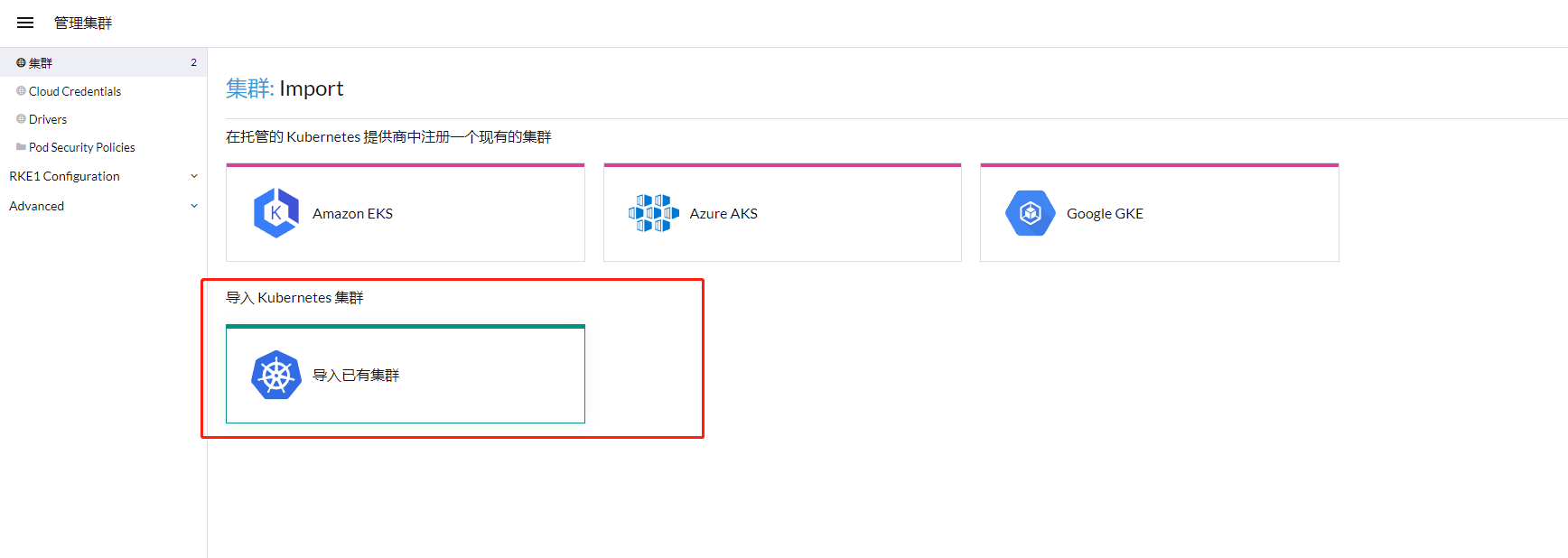

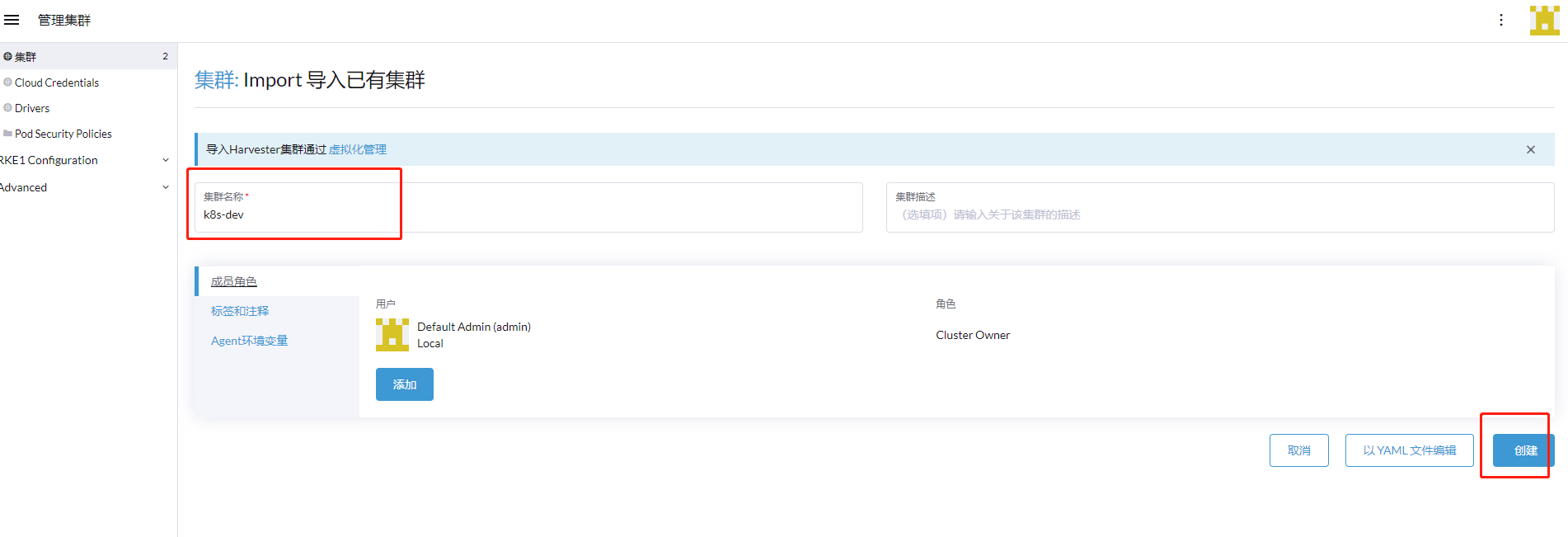

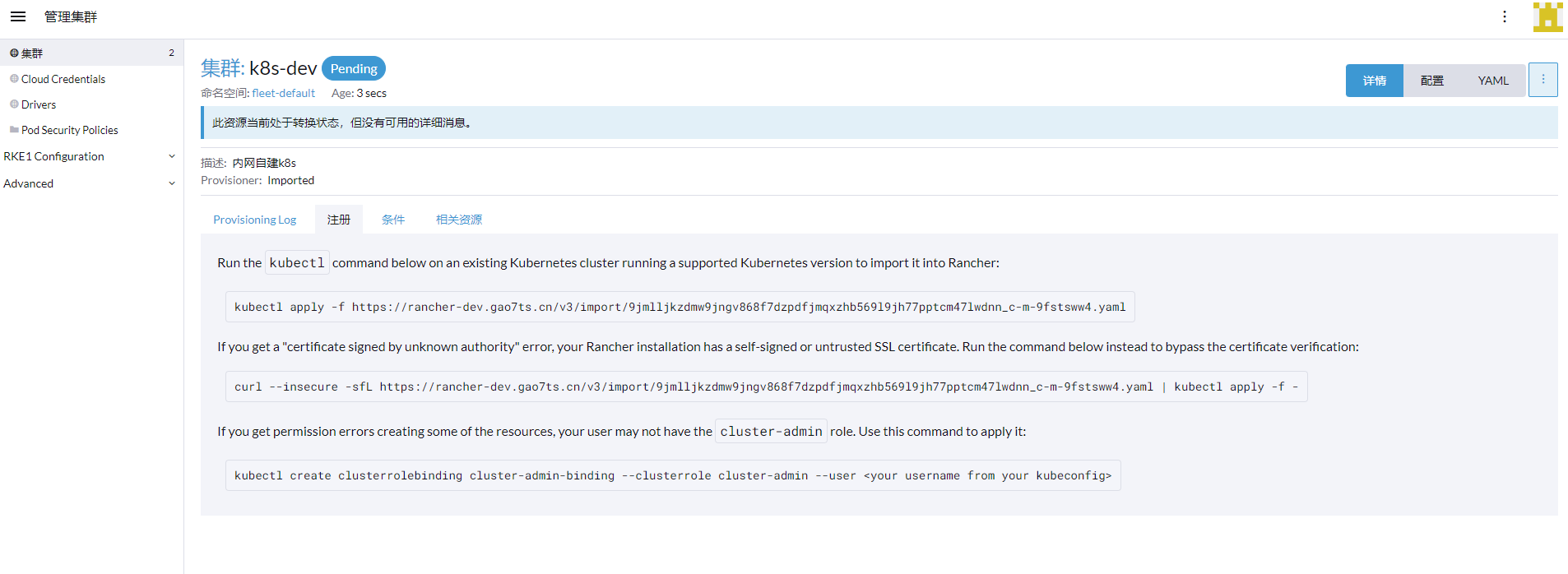

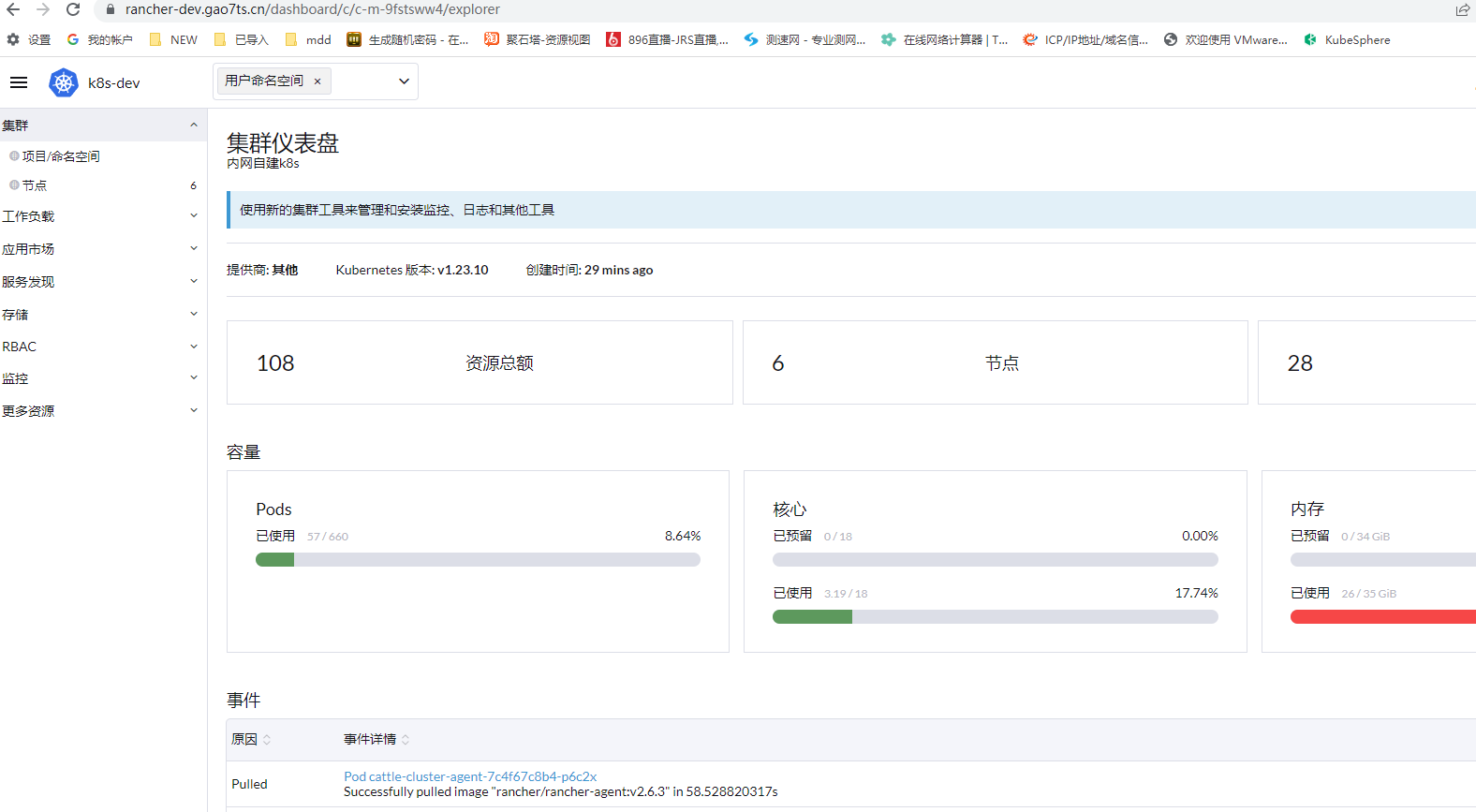

6. Importing an Existing Cluster

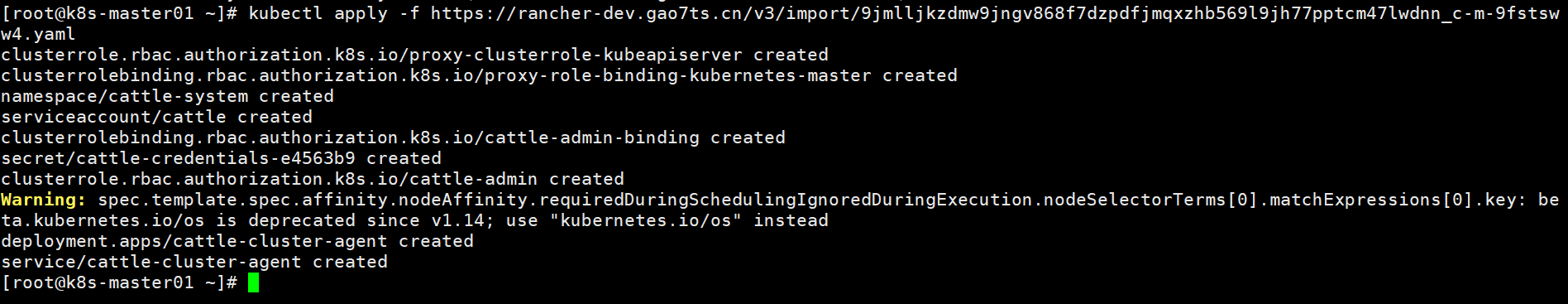

Import the Kubernetes cluster into Rancher (run on the master):

    kubectl apply -f https://rancher-dev.gao7ts.cn/v3/import/9jmlljkzdmw9jngv868f7dzpdfjmqxzhb569l9jh77pptcm47lwdnn_c-m-9fstsww4.yaml

If the Rancher server uses a self-signed or otherwise untrusted certificate, fetch the manifest with certificate verification disabled instead:

    curl --insecure -sfL https://rancher-dev.gao7ts.cn/v3/import/9jmlljkzdmw9jngv868f7dzpdfjmqxzhb569l9jh77pptcm47lwdnn_c-m-9fstsww4.yaml | kubectl apply -f -

If you hit permission errors while creating some resources, your user may not have the cluster-admin role. Grant it with this command:

    kubectl create clusterrolebinding cluster-admin-binding --clusterrole cluster-admin --user <your username from your kubeconfig>

Wait for the import to finish.

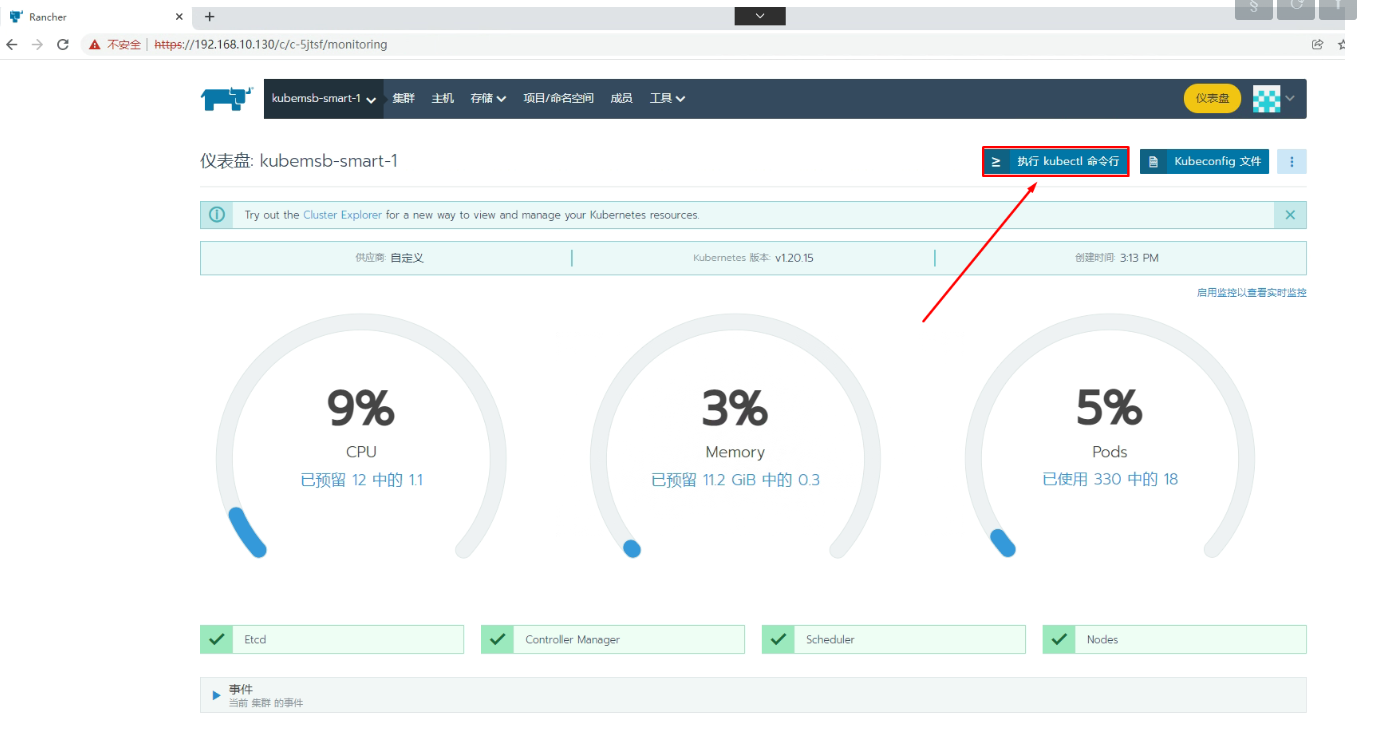

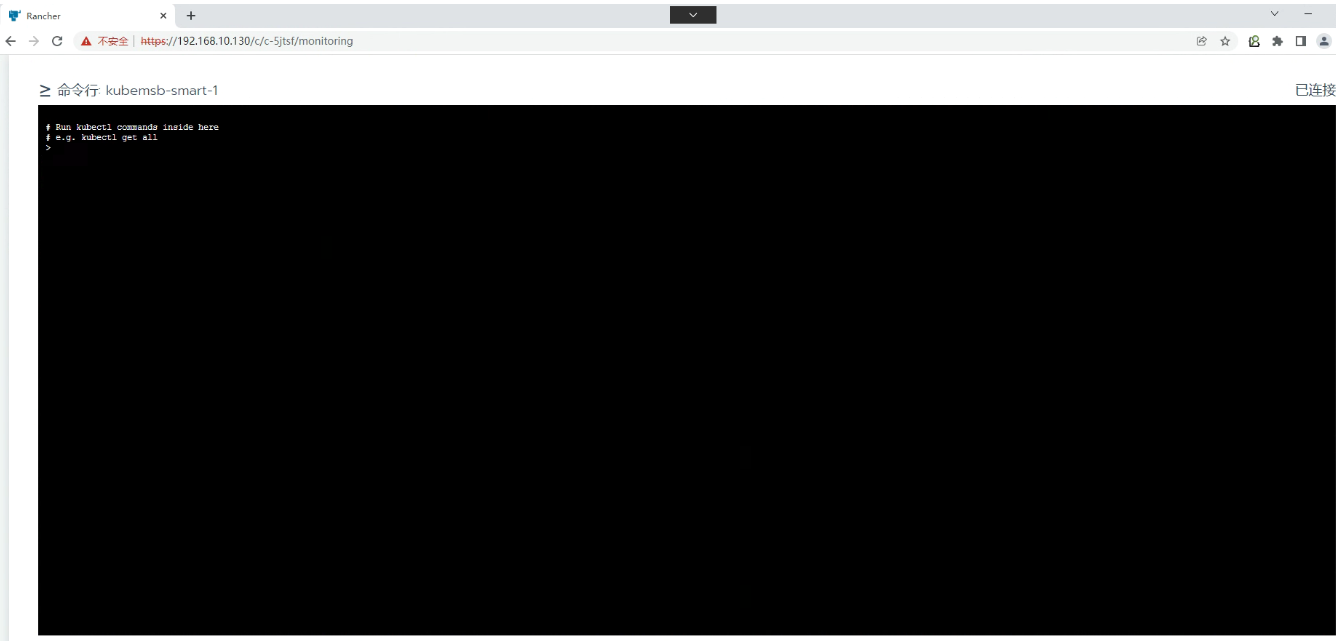

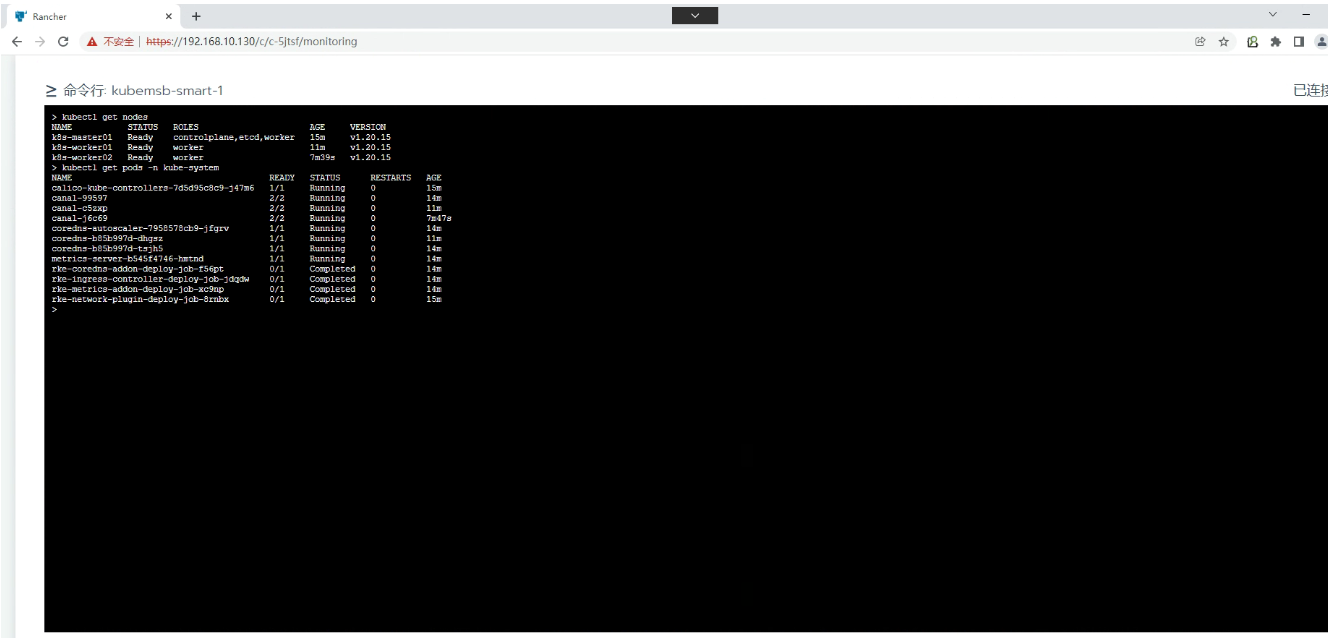

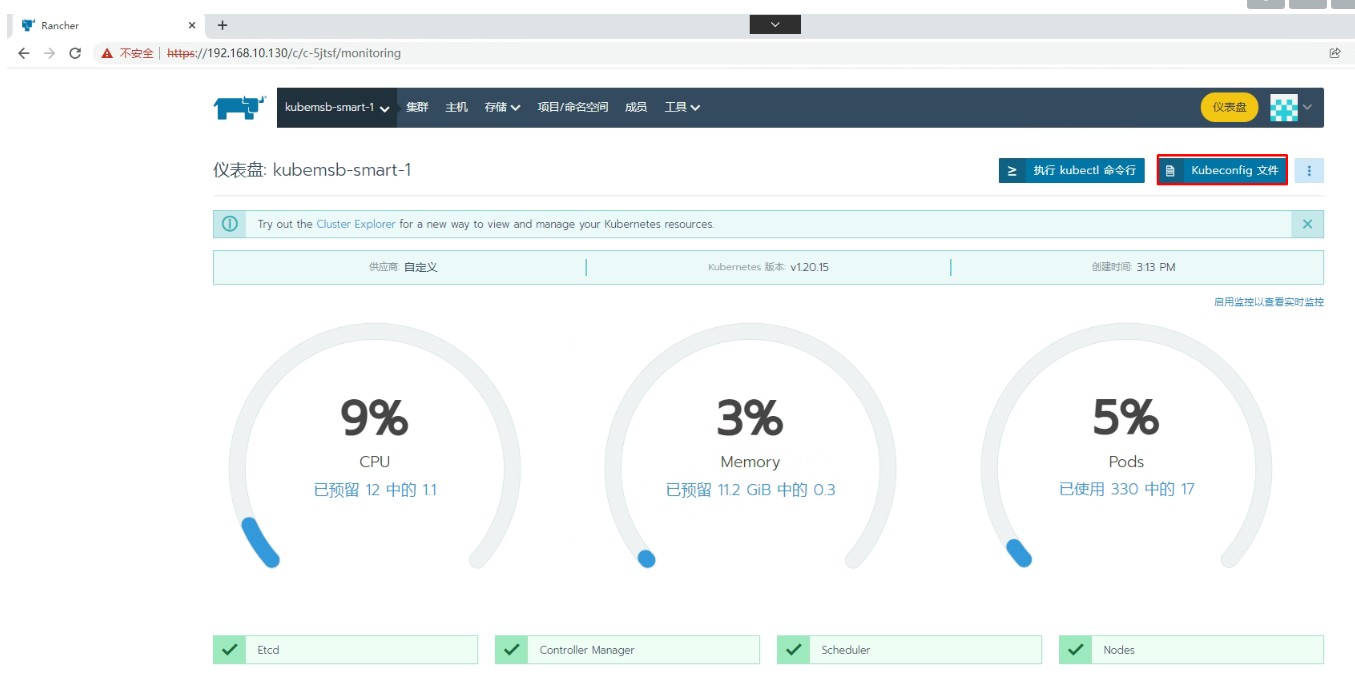

7. Configuring Command-Line Access to the Kubernetes Cluster

How do you access the cluster from the command line on a cluster node?

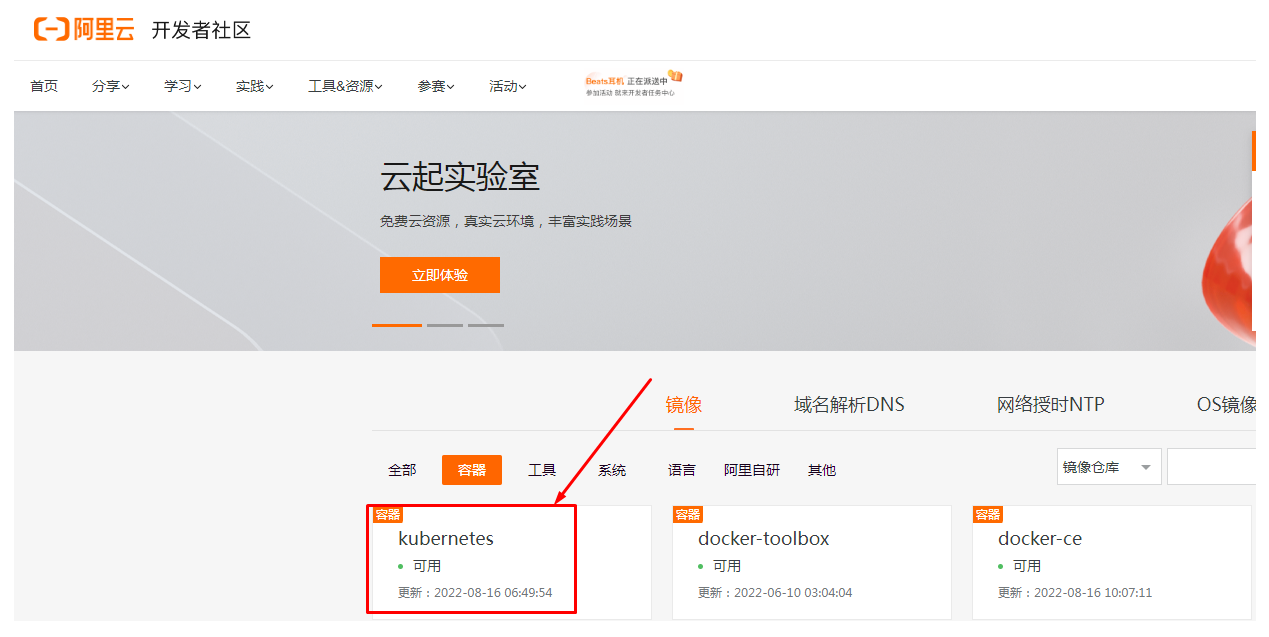

    [root@k8s-master01 ~]
    [kubernetes]
    name=Kubernetes
    baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
    enabled=1
    gpgcheck=1
    repo_gpgcheck=1
    gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
    EOF
    [root@k8s-master01 ~]
    [root@k8s-master01 ~]
    [kubernetes]
    name=Kubernetes
    baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
    enabled=1
    gpgcheck=0
    repo_gpgcheck=0
    gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
    [root@k8s-master01 ~]
    [root@k8s-master01 ~]

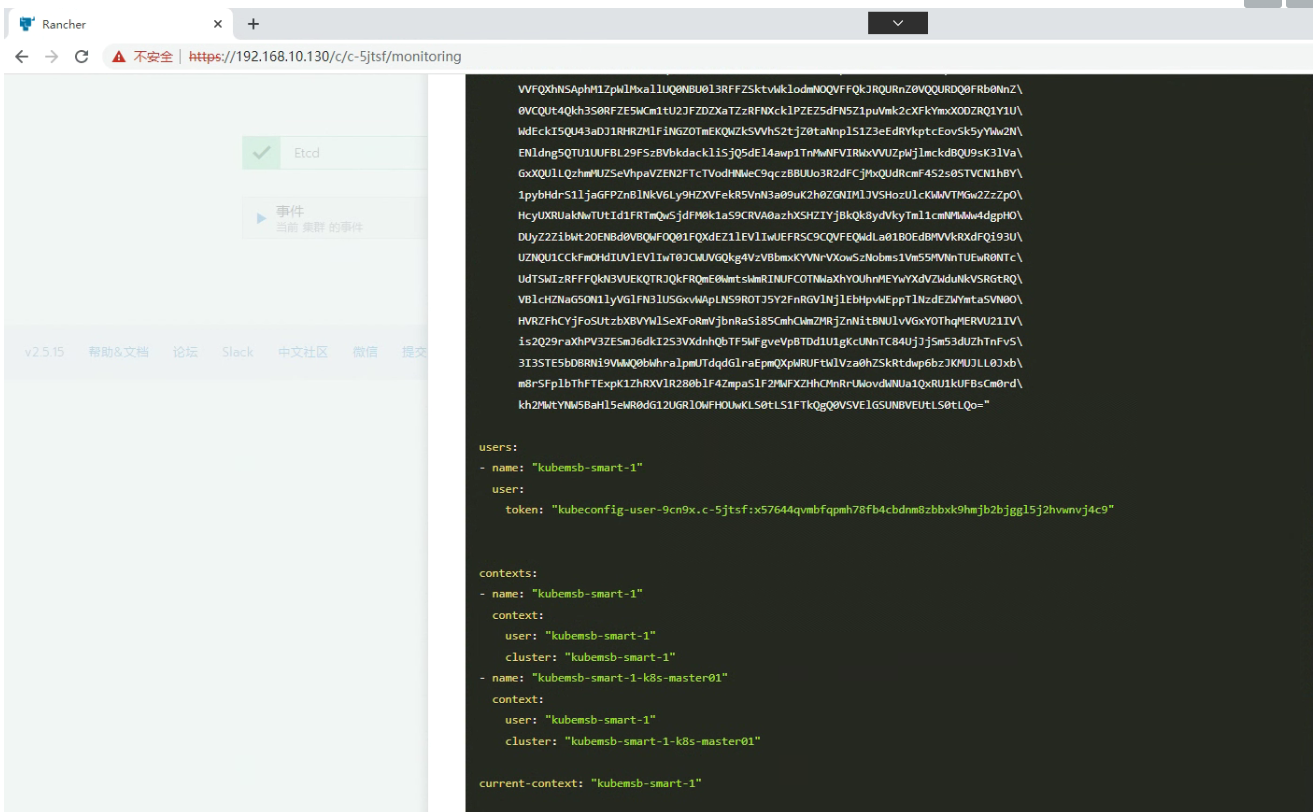

    [root@k8s-master01 ~]
    [root@k8s-master01 ~]
    apiVersion: v1
    kind: Config
    clusters:
    - name: "kubemsb-smart-1"
      cluster:
        server: "https://192.168.10.130/k8s/clusters/c-5jtsf"
        certificate-authority-data: "LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUJwekNDQ\
          VUyZ0F3SUJBZ0lCQURBS0JnZ3Foa2pPUFFRREFqQTdNUnd3R2dZRFZRUUtFeE5rZVc1aGJXbGoKY\
          kdsemRHVnVaWEl0YjNKbk1Sc3dHUVlEVlFRREV4SmtlVzVoYldsamJHbHpkR1Z1WlhJdFkyRXdIa\
          GNOTWpJdwpPREUyTURZMU9UVTBXaGNOTXpJd09ERXpNRFkxT1RVMFdqQTdNUnd3R2dZRFZRUUtFe\
          E5rZVc1aGJXbGpiR2x6CmRHVnVaWEl0YjNKbk1Sc3dHUVlEVlFRREV4SmtlVzVoYldsamJHbHpkR\
          1Z1WlhJdFkyRXdXVEFUQmdjcWhrak8KUFFJQkJnZ3Foa2pPUFFNQkJ3TkNBQVJMbDZKOWcxMlJQT\
          G93dnVHZkM0YnR3ZmhHUDBpR295N1U2cjBJK0JZeAozZCtuVDBEc3ZWOVJWV1BDOGZCdGhPZmJQN\
          GNYckx5YzJsR081RHkrSXRlM28wSXdRREFPQmdOVkhROEJBZjhFCkJBTUNBcVF3RHdZRFZSMFRBU\
          UgvQkFVd0F3RUIvekFkQmdOVkhRNEVGZ1FVNnZYWXBRYm9IdXF0UlBuS1FrS3gKMjBSZzJqMHdDZ\
          1lJS29aSXpqMEVBd0lEU0FBd1JRSWdMTUJ6YXNDREU4T2tCUk40TWRuZWNRU0xjMFVXQmNseApGO\
          UFCem1MQWQwb0NJUUNlRWFnRkdBa1ZsUnV1czllSE1VRUx6ODl6VlY5L096b3hvY1ROYnA5amlBP\
          T0KLS0tLS1FTkQgQ0VSVElGSUNBVEUtLS0tLQ=="
    - name: "kubemsb-smart-1-k8s-master01"
      cluster:
        server: "https://192.168.10.131:6443"
        certificate-authority-data: "LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUM0VENDQ\
          WNtZ0F3SUJBZ0lCQURBTkJna3Foa2lHOXcwQkFRc0ZBREFTTVJBd0RnWURWUVFERXdkcmRXSmwKT\
          FdOaE1CNFhEVEl5TURneE5qQTNNVFV3TmxvWERUTXlNRGd4TXpBM01UVXdObG93RWpFUU1BNEdBM\
          VVFQXhNSAphM1ZpWlMxallUQ0NBU0l3RFFZSktvWklodmNOQVFFQkJRQURnZ0VQQURDQ0FRb0NnZ\
          0VCQUt4Qkh3S0RFZE5WCm1tU2JFZDZXaTZzRFNXcklPZEZ5dFN5Z1puVmk2cXFkYmxXODZRQ1Y1U\
          WdEckI5QU43aDJ1RHRZMlFiNGZOTmEKQWZkSVVhS2tjZ0taNnplS1Z3eEdRYkptcEovSk5yYWw2N\
          ENldng5QTU1UUFBL29FSzBVbkdackliSjQ5dEl4awp1TnMwNFVIRWxVVUZpWjlmckdBQU9sK3lVa\
          GxXQUlLQzhmMUZSeVhpaVZEN2FTcTVodHNWeC9qczBBUUo3R2dFCjMxQUdRcmF4S2s0STVCN1hBY\
          1pybHdrS1ljaGFPZnBlNkV6Ly9HZXVFekR5VnN3a09uK2h0ZGNIMlJVSHozUlcKWWVTMGw2ZzZpO\
          HcyUXRUakNwTUtId1FRTmQwSjdFM0k1aS9CRVA0azhXSHZIYjBkQk8ydVkyTml1cmNMWWw4dgpHO\
          DUyZ2ZibWt2OENBd0VBQWFOQ01FQXdEZ1lEVlIwUEFRSC9CQVFEQWdLa01BOEdBMVVkRXdFQi93U\
          UZNQU1CCkFmOHdIUVlEVlIwT0JCWUVGQkg4VzVBbmxKYVNrVXowSzNobms1Vm55MVNnTUEwR0NTc\
          UdTSWIzRFFFQkN3VUEKQTRJQkFRQmE0WmtsWmRINUFCOTNWaXhYOUhnMEYwYXdVZWduNkVSRGtRQ\
          VBlcHZNaG5ON1lyVGlFN3lUSGxvWApLNS9ROTJ5Y2FnRGVlNjlEbHpvWEppTlNzdEZWYmtaSVN0O\
          HVRZFhCYjFoSUtzbXBVYWlSeXFoRmVjbnRaSi85CmhCWmZMRjZnNitBNUlvVGxYOThqMERVU21IV\
          is2Q29raXhPV3ZESmJ6dkI2S3VXdnhQbTF5WFgveVpBTDd1U1gKcUNnTC84UjJjSm53dUZhTnFvS\
          3I3STE5bDBRNi9VWWQ0bWhralpmUTdqdGlraEpmQXpWRUFtWlVza0hZSkRtdwp6bzJKMUJLL0Jxb\
          m8rSFplbThFTExpK1ZhRXVlR280blF4ZmpaSlF2MWFXZHhCMnRrUWovdWNUa1QxRU1kUFBsCm0rd\
          kh2MWtYNW5BaHl5eWR0dG12UGRlOWFHOUwKLS0tLS1FTkQgQ0VSVElGSUNBVEUtLS0tLQo="

    users:
    - name: "kubemsb-smart-1"
      user:
        token: "kubeconfig-user-9cn9x.c-5jtsf:x57644qvmbfqpmh78fb4cbdnm8zbbxk9hmjb2bjggl5j2hvwnvj4c9"

    contexts:
    - name: "kubemsb-smart-1"
      context:
        user: "kubemsb-smart-1"
        cluster: "kubemsb-smart-1"
    - name: "kubemsb-smart-1-k8s-master01"
      context:
        user: "kubemsb-smart-1"
        cluster: "kubemsb-smart-1-k8s-master01"

    current-context: "kubemsb-smart-1"

    [root@k8s-master01 ~]
    NAME           STATUS   ROLES                      AGE   VERSION
    k8s-master01   Ready    controlplane,etcd,worker   35m   v1.20.15
    k8s-worker01   Ready    worker                     31m   v1.20.15
    k8s-worker02   Ready    worker                     27m   v1.20.15
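A node listing like the one above can also be checked from a script, for example before importing the cluster or after adding workers. The filter below is a sketch that reads the output of `kubectl get nodes --no-headers` and succeeds only when every node reports `Ready`; the function name `all_nodes_ready` is illustrative.

```bash
#!/usr/bin/env bash
# Reads `kubectl get nodes --no-headers` output on stdin.
# Exit status 0: at least one node is listed and every STATUS is exactly "Ready".
# Exit status 1: no nodes, or some node has another status
# (including "Ready,SchedulingDisabled" for cordoned nodes).
all_nodes_ready() {
    awk '{ n++; if ($2 != "Ready") bad++ } END { exit !(n > 0 && bad == 0) }'
}

# Usage:
#   kubectl get nodes --no-headers | all_nodes_ready && echo "cluster is ready"
```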

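One of Rancher's biggest pitfalls is that its certificates are valid for only one year. After a year of running, the following error appears in the log: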

```
[info] Waiting on etcd startup: Get https://localhost:2379/health: x509: certificate has expired or is not yet valid
```

Once this happens the UI can no longer be logged in to, and after a restart the whole Rancher instance stays down. I went through the official documentation, and the various upgrade and renewal procedures looked cumbersome, far less convenient than simply reinstalling. Later testing showed that deleting the expired certificates (the .crt and .key files, about 14 of them) under /var/lib/rancher/k3s/server/tls/ also causes new certificates to be generated, which resolves the expiry problem.

Cleanup script

```bash
docker stop $(docker ps -aq)
docker system prune -f
docker volume rm $(docker volume ls -q)
docker image rm $(docker image ls -q)
rm -rf /etc/ceph \
       /etc/cni \
       /etc/kubernetes \
       /opt/cni \
       /opt/rke \
       /run/secrets/kubernetes.io \
       /run/calico \
       /run/flannel \
       /var/lib/calico \
       /var/lib/etcd \
       /var/lib/cni \
       /var/lib/kubelet \
       /var/lib/rancher/rke/log \
       /var/log/containers \
       /var/log/pods \
       /var/run/calico
```